Synthesising evidence

Careful and methodical synthesis of the evidence is required

Guidelines should ideally be informed by at least one well-conducted systematic review. In some cases, guideline developers may also consider overviews of multiple systematic reviews, or may incorporate individual studies and other sources of evidence where reviews are not available or feasible.

Once you have assembled the body of evidence from the literature searches, a careful synthesis of the evidence is required to assist the guideline development group to make decisions about the evidence. Evidence synthesis is one step in the systematic review process, beginning with the detailed and systematic collection of data from the included studies or reviews, including descriptive information and outcome data. You may be conducting the systematic review yourself, or commissioning a research team to complete this work on behalf of the guideline development group. In either case, the team completing the review, including the synthesis step, will require expertise to select and apply appropriate methods, including input from statisticians where appropriate. This will ensure the synthesis provides a sound basis for the guideline’s recommendations.

A good evidence synthesis does not simply collate and list the findings of relevant studies. It also aims to:

- be comprehensive

- use systematic and scientific methods and be clear about how decisions were made

- evaluate the certainty of the research evidence

- convey the overall conclusions that can validly be drawn from the body of evidence.

A guideline not informed by a comprehensive evidence synthesis can result in inappropriate recommendations. In addition, if the evidence synthesis does not convey the level of certainty about the evidence, inappropriate recommendations can also be made.

This module outlines the elements of good practice that should be incorporated into any synthesis of the evidence. Guidance on other steps in the process is provided in other modules (see the Forming the questions, Deciding what evidence to include, Identifying the evidence, Assessing risk of bias, Assessing certainty of evidence and Evidence to decision modules).

What to do

1. Plan the synthesis

As with all elements of research, the synthesis methods should be planned in advance as part of a systematic review protocol (NICE 2014; WHO 2014). Clear and detailed planning will avoid some of the major pitfalls in guideline development: it helps ensure that the evidence identified is appropriate to the purpose, and that the work required can be adequately prioritised and resourced.

Consider the purpose of the guideline and questions your evidence will answer — see the Forming the questions module. This notes that if the right questions are not clearly defined at the beginning of the process, your evidence search and subsequent results will be impacted. This means you may not have a complete understanding of the evidence when the guideline development group comes together to consider its recommendations.

Forming your questions should give you clear guidance on the priorities for evidence synthesis, including the comparisons to be made, the types of evidence you will be working with and the outcomes to be measured. Some additional questions that you may want to consider at an early stage when planning your systematic review include:

- Will you be combining different types of evidence, such as qualitative and quantitative evidence, or human and animal research?

- Is your guideline broad, and likely to consider overviews or multiple existing systematic reviews?

- Will you be updating existing reviews or conducting reviews from scratch?

- Are the interventions, exposures and populations complex and likely to generate very diverse, or heterogeneous studies?

- Are there specific features you would like to investigate, such as potential confounders; characteristics of the population (for example, children versus adults, or high- versus low-income settings); or variations in the intervention?

- Are there multiple interventions that you would like to be able to rank, assess or compare?

- Will there be a lot of data to work with or is the evidence likely to be sparse?

- Are any populations differentially affected by the health condition?

It is important to plan in advance the comparisons required to answer your questions; for example, between multiple tests or intervention options, or against control populations. This may seem obvious, but where there are likely to be multiple, complex variations between the included studies, you can avoid considerable difficulty by having clear categories for intervention or exposure types (Lewin, Hendry et al. 2017). When planning the purpose and scope of the guideline, consider mapping logic models or other frameworks to the proposed synthesis.

You will also need to select and define the most meaningful outcomes that will be used to answer your questions. In addition, you should attempt to pre-specify any factors likely to modify the effect of your intervention or exposure of interest, such as potential confounders or causes of heterogeneity (see the Forming the questions module).

As far as possible, specify in advance the techniques and methods you will use — these include statistical techniques, summary statistics or narrative and qualitative synthesis methods. Considering these factors in advance will reduce the risk of inadvertently introducing bias into the process. It will also ensure that appropriate methods are selected and that they are applied consistently and fairly across all the evidence. Additionally, it will help prevent any retrospective decisions about statistical methods or the inclusion of studies, especially after the study results are known. Bear in mind that it is almost certain that your planned approach will need to be adapted during the synthesis process — this can be managed by transparently identifying and justifying changes to the methods in the technical report.

There may be cases where conducting new or updated systematic reviews is not feasible, such as for very broad guidelines addressing a wide range of clinical questions (for example, the Australian Clinical guidelines for stroke management). In some cases the available time and resources only allow for a rapid review approach or the adoption/adaptation of an existing guideline. It is important to realise that you can undertake different approaches for different parts of the review. For example, the main clinical or policy questions of the guideline may be addressed by systematic reviews. Additional supplementary information, such as the consideration of consumer values and preferences, may use other approaches to gather evidence. Wherever processes other than complete systematic reviews are used, aim to make the process as rigorous and methodologically sound as possible and minimise the risks of bias that can be introduced. A transparent description of the methods used and a rationale should be presented (Tricco, Langlois et al. 2017). A potential flexible framework for undertaking a rapid review, designed by Plüddemann et al. (2018), outlines the importance of a clearly formulated clinical research question as well as a clear rationale for the needs of research to inform decision-making.

Your protocol should include plans for all the steps included in this module. If you are undertaking a systematic review, you should also consider registering your systematic review protocol(s) on the international prospective register of systematic reviews (PROSPERO) to avoid duplication of effort and to keep a record for future protocol comparisons and evaluations. If you are commissioning a review through an organisation such as Cochrane, the title will be registered with them and subsequently published in the Cochrane Library.

2. Synthesise the evidence

Synthesising the body of evidence is a critical part of the systematic review process. It begins with the detailed and systematic collection of data from the included studies or reviews, including descriptive information and outcome data (see the Identifying the evidence module). Descriptive information can be summarised in a detailed evidence report accompanying the guideline — this can include a description of the methods, population, intervention/exposure and setting of each study or review. Summarising these key characteristics in comparative summary tables, for example, grouping studies by settings or intervention categories can be helpful for guideline readers to navigate the evidence. It is important to provide enough detail to allow end users — including guideline developers reading commissioned reviews — to assess the comparability of the available evidence to their own context. While this module does not focus on synthesising the evidence of economic analyses, resources such as the Consolidated Health Economic Evaluation Reporting Standards (CHEERS) can be used to help in this activity (Husereau, Drummond et al. 2013).

The Agency for Healthcare Research and Quality (AHRQ) has produced a Methods guide for medical test reviews as a practical resource for investigators interested in conducting systematic reviews. The AHRQ has also developed a Methods guide for effectiveness and comparative effectiveness reviews (CERs).

Table 1 outlines the key sources of guidance for different types of evidence synthesis. Different methods will be appropriate for different types of questions and different types of evidence. Further details on two overarching categories — statistical and non-statistical (that is, narrative or qualitative synthesis) — are outlined in the sections below.

2.1. Statistical synthesis methods (meta-analysis)

Once the descriptive information is synthesised narratively, it may be appropriate to undertake a meta-analysis. A meta-analysis involves using statistical techniques to quantitatively combine the results of multiple studies, thereby drawing conclusions about the body of evidence. A weighted average of the effect estimates from the individual studies that have been included is calculated, with weights usually derived from the precision of each study. That is, studies with more precise estimates or larger sample sizes will usually receive the most weight.
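The weighted average described above can be illustrated with a minimal sketch of inverse-variance (fixed-effect) pooling. The function name and the three study results are hypothetical and chosen only for illustration; a real analysis would normally use dedicated meta-analysis software with statistical advice.

```python
import math

def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance weighted average of study effect estimates.

    Each study is weighted by 1 / SE^2, so more precise studies
    contribute more to the pooled estimate.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    relative_weights = [w / total for w in weights]
    return pooled, pooled_se, relative_weights

# Hypothetical log risk ratios and standard errors from three studies
log_rr = [-0.30, -0.10, -0.25]
se = [0.10, 0.20, 0.40]

pooled, pooled_se, rel_weights = fixed_effect_pool(log_rr, se)
print(f"Pooled log RR: {pooled:.3f} (SE {pooled_se:.3f})")
print("Relative weights:", [round(w, 3) for w in rel_weights])
```

In this made-up example the most precise study (standard error 0.10) receives about three-quarters of the total weight, which is why a single large study can dominate a pooled result.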

A meta-analysis is very flexible and can be used to combine the results of ‘like’ studies that are the same in their design. In a meta-analysis, these results are summarised as a single statistic (Deeks, Higgins et al. 2017); and results are usually presented in a summary plot, such as a forest plot for prevalence or intervention effects, or a receiver operating characteristic (ROC) curve for diagnostic test accuracy studies.

The goals of a meta-analysis are to:

- obtain a quantitative estimate of the size and direction of the effect (or other estimate, such as prevalence)

- increase the precision of the effect estimate by combining the sample sizes of multiple smaller studies

- answer questions that cannot be answered by individual studies, such as examining the causes for differences or heterogeneity between studies

- resolve controversies that arise when study results conflict (Deeks, Higgins et al. 2017).

Studies must be combined using methods appropriate for the data you are using. There are many statistical approaches available; and advice from an experienced statistician or methodologist will be important to assist in making these choices.

A meta-analysis may not be appropriate if:

- the available studies are too heterogeneous, or not similar enough to each other (for example, in population, setting, intervention/exposure or outcome measurement), or where an average estimate will not be meaningful

- the studies are at such a high risk of bias that the overall estimate will also be biased (see the Assessing risk of bias module)

- you do not have all or most of the relevant studies, or relevant outcome measures are unavailable due to reporting biases.

If the studies are not similar enough or the risk of bias or inconsistency is high, it may be better not to proceed with a meta-analysis and to opt instead for alternative synthesis methods, such as narrative synthesis (Deeks, Higgins et al. 2017). These methods are discussed in more detail in Section 2.2.

Guidance on how to perform the meta-analysis and which type of meta-analysis is appropriate (such as random or fixed models) can be found in the Cochrane Handbook. Additionally, while Cochrane recommends not using the random effects model when studies with small samples are included in the meta-analysis, using a fixed effects model (which will be affected less) is also likely to be inappropriate. Recent analyses of the ‘small study effect’ in meta-analyses of interventions have found that including studies with small samples will overestimate treatment effects (Turner, Bird et al. 2013; Zhang, Xu et al. 2013; Papageorgiou, Antonoglou et al. 2014).
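To see why small studies matter more under a random-effects model, the sketch below uses the DerSimonian-Laird estimator, one commonly used random-effects method. It is offered as an illustration only: the function name and the three study results are hypothetical, and the Cochrane Handbook describes other estimators that may be preferable in practice.

```python
import math

def dersimonian_laird(estimates, standard_errors):
    """Random-effects pooling using the DerSimonian-Laird estimate of tau^2."""
    variances = [se ** 2 for se in standard_errors]
    w = [1.0 / v for v in variances]          # fixed-effect weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sw

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to every study's variance
    w_star = [1.0 / (v + tau2) for v in variances]
    sws = sum(w_star)
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sws
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    return {
        "fixed": fixed,
        "fixed_se": math.sqrt(1.0 / sw),
        "random": pooled,
        "random_se": math.sqrt(1.0 / sws),
        "tau2": tau2,
        "i2": i2,
        "fixed_weights": [wi / sw for wi in w],
        "random_weights": [wi / sws for wi in w_star],
    }

# Hypothetical, deliberately inconsistent study results (log risk ratios)
result = dersimonian_laird([-0.8, -0.1, 0.3], [0.1, 0.2, 0.4])
print(f"tau^2 = {result['tau2']:.3f}, I^2 = {result['i2']:.0f}%")
print("Smallest study weight, fixed :", round(result["fixed_weights"][2], 3))
print("Smallest study weight, random:", round(result["random_weights"][2], 3))
```

With these made-up numbers the smallest study's share of the weight rises substantially under the random-effects model, so any bias in small studies has more influence on the pooled estimate.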

If your guideline is considering existing systematic reviews you may decide to re-analyse their data — to include recent studies, to use statistical methods more consistent with other available reviews or to meet current methodological standards (Garner, Hopewell et al. 2016). Whether this is possible may depend on the level of detail that is reported in the existing review or otherwise available by contacting its authors.

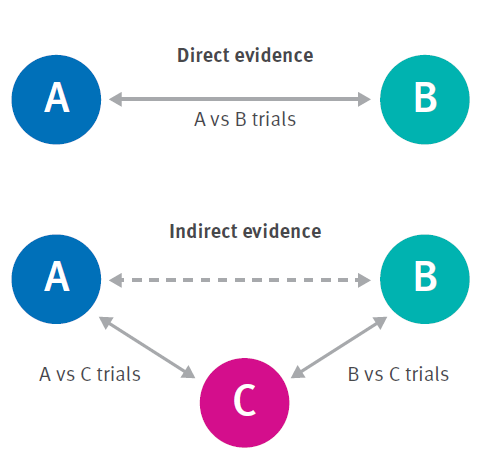

More complex approaches to a meta-analysis exist, including multivariate meta-analysis, in which multiple outcomes can be analysed simultaneously; and network meta-analysis (NMA) through which multiple interventions for a condition can be simultaneously compared and ranked. NMA constructs a network of trials of related interventions using both direct comparisons (comparing two options within a study) and indirect comparisons (comparing two options from different studies that each used a third, common comparator — see Figure 1). However, NMA models require many assumptions about the comparability and consistency of direct and indirect effects across studies. These are only applicable in some circumstances, and so NMA is not always an appropriate method (Riley, Jackson et al. 2017). NMA is most commonly found in reviews of interventions with a high degree of consistency in the biological effects of interventions across studies and populations, for example, comparisons of multiple drugs for a specific condition. In the absence of an NMA the committee will have to compare multiple options in some other way to reach recommendations as described in Section 2.2.
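The idea of an indirect comparison through a common comparator can be sketched for the simplest case of three interventions. This is the building block often described as an adjusted indirect comparison, not a full NMA; the function name and inputs are hypothetical, and the calculation assumes the two sets of trials are similar enough for the comparison to be valid.

```python
import math

def indirect_comparison(d_ac, se_ac, d_bc, se_bc):
    """Indirect estimate of A versus B from A-versus-C and B-versus-C results.

    Effects must be on an additive scale (for example, log odds ratios
    or mean differences). The variances add, so the indirect estimate
    is less precise than either direct estimate.
    """
    d_ab = d_ac - d_bc
    se_ab = math.sqrt(se_ac ** 2 + se_bc ** 2)
    ci = (d_ab - 1.96 * se_ab, d_ab + 1.96 * se_ab)
    return d_ab, se_ab, ci

# Hypothetical log odds ratios: A vs C and B vs C
d_ab, se_ab, ci = indirect_comparison(-0.5, 0.3, -0.2, 0.4)
print(f"Indirect A vs B: {d_ab:.2f} (95% CI {ci[0]:.2f} to {ci[1]:.2f})")
```

Because the standard error of the indirect estimate is larger than that of either input, indirect evidence alone is usually less precise than a direct comparison of similar size.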

2.2. Alternative synthesis methods

If statistical synthesis is not appropriate — such as reviews of evidence that are qualitative, heterogeneous or not in the right format to make a summary possible — then alternative and non-statistical synthesis methods can be used to describe and explore the results in a structured and systematic way (Pluye and Hong 2014).

Alternative and non-statistical synthesis methods of quantitative data should be planned, structured and conducted with similar considerations to a statistical approach. For example, environmental health guidelines such as water quality guidelines will use narrative reviews of the literature and water quality monitoring data. Without sufficient planning, ad-hoc discussion of the available results can lead to biased presentation of the evidence. It can also lead to the unstructured presentation of large volumes of evidence that can overwhelm readers and lead them to draw inappropriate conclusions. There are numerous approaches available to present and explore the results without meta-analysis, which should be selected and planned in advance (Popay, Roberts et al. 2006).

Structure the synthesis with reference to the review’s logic model or theoretical framework, including pre-planned comparisons and outcome measures (see the Forming the questions module). You can then present the results from the included studies, explore relationships between the results and draw conclusions about the overall direction, size and certainty of the findings. Ensure you consider and document any potential weakness of this synthesis approach. This can include the potential for bias, the inability to statistically weight effect measures and the presence of uncertainty arising from the lack of clear or detailed information about the included studies.

Overviews of reviews are likely to include alternative, rather than statistical synthesis, particularly where the overview includes only one systematic review relevant to each question within its scope. Issues specific to the analysis of overviews include transparency in the selection of reviews; assessing the match between the scope of the review and the scope of the overview; and the extent of overlap of primary studies across the reviews. In some cases, a new statistical analysis may be conducted using the review data, for example, to increase the comparability of results or to focus on specific subgroups (Pollock, Fernandes et al. 2016).

Where appropriate, synthesis of qualitative evidence requires different techniques and may focus on a summative approach to draw together the findings of multiple studies. It may also require a more in-depth analysis based on one of several qualitative methodologies to draw new conclusions and inform further theoretical development (Harden, Thomas et al. 2018).

Some guideline developers must find ways to integrate evidence of very different types in order to fully address their questions, for example, reviews:

- integrating qualitative evidence with quantitative evidence, for example, implementation or acceptability with effectiveness (Harden, Thomas et al. 2018)

- for which both randomised and non-randomised study designs are available and may provide complementary evidence — such as where non-randomised studies can provide more widely applicable evidence to the population or intervention of interest (Schünemann, Tugwell et al. 2013)

- of environmental exposures which may draw on in vitro, animal, toxicological and epidemiological studies to obtain a complete picture of effects of exposure to a contaminant (Woodruff and Sutton 2011; Woodruff and Sutton 2014).

It would be inappropriate to combine such diverse sources of evidence statistically, so a careful approach is needed to synthesise each body of evidence separately. You can then investigate the extent to which they complement or complicate each other’s conclusions.

3. Investigate the reasons for different effects

One of the key objectives for evidence synthesis is to explore the reasons for different observed effects and to identify any populations or intervention/exposure categories that are associated with these differences. This can be a critical area of investigation used to inform the guideline’s recommendations and to support specific actions for different populations. It is especially relevant to considerations of equity (see the Equity module).

If you are able to use meta-analysis, the reasons for heterogeneity can be investigated using statistical methods such as subgroup analyses and meta-regression. Subgroup analyses can be used to compare results between different participant groups, settings or variations on the intervention or exposure. Subgroup analyses are rarely based on randomised comparisons — even within randomised trials — and are usually observational by nature. As the number of subgroup comparisons increases, so does the likelihood of false positive results. Subgroup analyses should therefore be undertaken only on a limited number of characteristics that have been flagged at the protocol planning stage as the most likely to influence the effect. The results should always be interpreted with caution (Deeks, Higgins et al. 2017).
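The growth in false positive results with the number of subgroup comparisons can be illustrated with a short calculation. The sketch assumes that the comparisons are independent and that each is tested at a significance level of 0.05; both are simplifying assumptions, and the function name is hypothetical.

```python
def chance_of_false_positive(n_comparisons, alpha=0.05):
    """Probability of at least one false positive across independent tests
    when no true subgroup differences exist."""
    return 1 - (1 - alpha) ** n_comparisons

for k in (1, 5, 10, 20):
    print(f"{k:>2} comparisons: {chance_of_false_positive(k):.0%}")
```

Under these assumptions, ten subgroup comparisons carry roughly a 40% chance of at least one spurious "significant" finding, which is one reason to limit subgroup analyses to a few characteristics named in the protocol.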

Meta-regression is one alternative technique that can assess the relationship between the treatment effects and multiple characteristics of interest. In addition, there are statistical models for meta-analysis that incorporate observed heterogeneity in the calculation of the effect estimate, for example, a random-effects meta-analysis. However, it is important to note that using these models does not reduce or explain the heterogeneity (Deeks, Higgins et al. 2017).
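As a rough illustration of what meta-regression does, the sketch below fits a weighted straight line relating study effect estimates to a single study-level characteristic. It is a simplified fixed-effect version with one covariate; the function name and data are hypothetical, and real analyses typically use random-effects meta-regression in dedicated software.

```python
import math

def meta_regression(estimates, standard_errors, covariate):
    """Weighted least squares of effect estimates on one study-level covariate,
    using inverse-variance weights (fixed-effect meta-regression)."""
    w = [1.0 / se ** 2 for se in standard_errors]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, estimates)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    if sxx == 0:
        raise ValueError("covariate does not vary across studies")
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, covariate, estimates))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    slope_se = math.sqrt(1.0 / sxx)
    return intercept, slope, slope_se

# Hypothetical data: effect estimates that rise with mean participant age
mean_age = [10, 20, 30, 40]
effects = [0.1 + 0.02 * age for age in mean_age]
ses = [0.10, 0.15, 0.20, 0.25]

intercept, slope, slope_se = meta_regression(effects, ses, mean_age)
print(f"Change in effect per year of age: {slope:.3f} (SE {slope_se:.3f})")
```

The slope describes how the effect estimate changes across studies as the characteristic changes. Because it compares studies rather than randomised groups, it is observational and should be interpreted with the same caution as subgroup analyses.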

If you are using alternative synthesis methods or non-statistical methods — including qualitative synthesis — heterogeneity can still be assessed through a careful and planned comparison of effects between studies. This can be based on the similar categorisation of populations, settings or interventions as those used in subgroup analysis or meta-regression. Further guidance on approaching this kind of investigation is available elsewhere (Popay, Roberts et al. 2006).

4. Report the synthesis findings

A detailed technical report should be prepared, including:

- all methods used for synthesis and GRADE assessment — including those pre-specified in the protocol, any subsequent amendments or additional methods and a rationale for any changes

- a description of all the included studies or reviews

- an assessment of risk of bias in the included studies or reviews

- the results of all individual studies or reviews

- the risk of bias for each study or review

- the synthesised findings, including meta-analyses and narrative or qualitative syntheses

- any outcomes pre-specified as important but for which no evidence was found

- any other identified gaps in the evidence.

This detailed report will first be presented to the guideline development group to inform their deliberations and recommendations. It should also be made publicly available alongside the final guideline.

Additionally, the results of the synthesis should be provided in summary formats. For the guideline development group a summary ‘Evidence Profile’ can be presented for each key question (Guyatt, Oxman et al. 2011; GRADE Working Group 2013). This will present the results for each key outcome, in both relative and absolute terms, alongside your assessment of the certainty of the available evidence (see the Assessing certainty of evidence module). Summaries of these results can also be made available in the final guideline (for example, the Australian Clinical Guidelines for Stroke Management 2017).
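Presenting results in both relative and absolute terms usually means applying a relative effect to an assumed baseline risk. The following sketch shows that arithmetic for a risk ratio; the function name, the baseline risk and the risk ratio are hypothetical values chosen for illustration.

```python
def absolute_effect_per_1000(baseline_risk, rr, rr_low, rr_high):
    """Convert a risk ratio and its confidence limits into the number of
    additional (positive) or fewer (negative) events per 1000 people,
    given an assumed baseline risk in the comparison group."""
    def difference(ratio):
        return round(1000 * baseline_risk * (ratio - 1))
    return difference(rr), difference(rr_low), difference(rr_high)

# Hypothetical: 20% baseline risk, risk ratio 0.75 (95% CI 0.60 to 0.90)
point, lower, upper = absolute_effect_per_1000(0.20, 0.75, 0.60, 0.90)
print(f"{abs(point)} fewer per 1000 (from {abs(lower)} fewer to {abs(upper)} fewer)")
```

The same risk ratio produces a much smaller absolute effect when the baseline risk is low, which is why absolute effects are often reported for more than one assumed baseline risk.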

An example of a section from an evaluation report is provided below, to illustrate a summary format (Table 2, immediately below).

| Outcomes | Illustrative comparative risks* (95% CI) | No of participants (studies) | Quality of the evidence (GRADE)1 | Comments |
|---|---|---|---|---|
| Caries in mixed dentition | Non-significant reduction in caries in one study in infants and children aged 3–12 years; non-significant inverse association between dmft/DMFT and water fluoridation in children aged 6 to 11 years | 4,784 (2 observational studies) | ⨁◯◯◯ | One study from Australia and another from Canada in the context of CWF. Downgraded for imprecision. |
| Caries incidence in mixed dentition | Non-significant inverse association between incidence of cavitated and non-cavitated caries in mixed dentition and water fluoridation (aged 3 to 13 years). | 154 (1 observational study) | ⨁◯◯◯ | A single study from the US using Iowa Fluoride Study data. Downgraded for indirectness and imprecision. |

Note: Key to GRADE quality of evidence: ⨁⨁⨁⨁ = We are very confident in the reported associations; ⨁⨁⨁◯ = We are moderately confident in the reported associations; ⨁⨁◯◯ = Our confidence in the reported associations is limited; ⨁◯◯◯ = We are not confident about the reported associations.

Abbreviations: dmft/s = number of decayed, missing and filled deciduous teeth/surfaces; dft = number of decayed and filled deciduous teeth; DMFT/S = number of decayed, missing and filled permanent teeth/surfaces; CWF = community water fluoridation; CI = confidence interval; US = United States

You should report both the evidence and descriptions of methods in enough detail to allow others to appraise the guideline. This will allow future guideline developers to understand your approach when deciding whether to update, adopt or adapt your guideline (see the Adopt, adapt or start from scratch module).

NHMRC Standards

The following standards apply to the Synthesising evidence module:

2. To be transparent guidelines will make publicly available:

2.1. The details of all processes and procedures used to develop the guideline

2.2. The source evidence.

6. To be evidence informed guidelines will:

6.1. Be informed by well conducted systematic reviews

6.2. Consider the body of evidence for each outcome (including the quality of that evidence) and other factors that influence the process of making recommendations including benefits and harms, values and preferences, resource use and acceptability.

Guidelines approved by NHMRC must meet the requirements outlined in the Procedures and requirements for meeting the NHMRC standard.

Useful resources

Cochrane Handbook for Systematic Reviews of Interventions

Developing NICE Guidelines: The Manual

Narrative Synthesis in Systematic Reviews guidance, University of Lancaster (Popay, Roberts et al. 2006)

Glossary

Evidence synthesis: Includes, but is not restricted to, systematic reviews. Findings from research using a wide range of designs including randomised controlled trials, observational studies, designs that produce economic and qualitative data may all need to be combined to inform judgements on effectiveness, cost-effectiveness, appropriateness and feasibility (Popay, Roberts et al. 2006).

Meta-analysis: the use of statistical techniques to integrate the results of included studies. Sometimes misused as a synonym for systematic reviews, where the review includes a meta-analysis.

Mixed studies review: also known as integrative reviews. A variation of a systematic review that combines data from qualitative, quantitative and mixed methods studies (Pluye and Hong 2014).

Narrative review: a term used to describe more traditional literature reviews that are typically not systematic or transparent in their approach to synthesis (Popay, Roberts et al. 2006). These reviews generally do not have a description of how studies were selected; study quality is generally not assessed, but they can provide some general information about a topic.

Narrative synthesis: synthesis of findings from multiple studies that relies primarily on the use of words and text to summarise and explain the findings of the synthesis (Popay, Roberts et al. 2006).

Narrative systematic review: an approach involving the systematic search, identification and inclusion of studies (i.e. a systematic review) with a narrative synthesis of the findings.

Systematic review: a review of a clearly formulated question that uses systematic and explicit methods to identify, select, and critically appraise relevant research, and to collect and analyse data from the studies that are included in the review. Statistical methods (meta-analysis) may or may not be used to analyse and summarise the results of the included studies (Cochrane Glossary).

References

Deeks, J. J., Higgins, J. P. T., et al. (2017). Chapter 9: Analysing data and undertaking meta-analyses. Cochrane Handbook for Systematic Reviews of Interventions. J. Higgins, R. Churchill, J. Chandler and M. Cumpston, version 5.2.0 (updated June 2017), Cochrane. Available from www.training.cochrane.org/handbook.

Plüddemann, A., Aronson, J. K., Onakpoya, I., et al. (2018). Redefining rapid reviews: a flexible framework for restricted systematic reviews. BMJ Evidence-Based Medicine 23: 201-203.

Shea, B. J., Reeves, B. C., et al. (2017). AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 358: j4008.

Acknowledgements

NHMRC would like to acknowledge and thank Professor Brigid Gillespie (author) and Professor Lukman Thalib (author) from Griffith University, Miranda Cumpston (author) and Professor Wendy Chaboyer (editor) from Griffith University for their contributions to this module.

Version 5.1. Last updated 6 September 2019.

Suggested citation: NHMRC. Guidelines for Guidelines: Synthesising evidence. https://nhmrc.gov.au/guidelinesforguidelines/develop/synthesising-evidence. Last published 6 September 2019.