Transcript for the Ideas Grants peer reviewer Q&A webinar. Recorded on Tuesday, 16 July 2024 1:00 pm - 3:00 pm (AEST).

Introduction and overview

Glover, Julie 0:56

Good afternoon, everyone, and thank you all for making the time to attend the 2024 Ideas Grants question and answer webinar. My name is Dr Julie Glover and I'm the Executive Director of the Research Foundations branch, the branch that the Ideas Grant Scheme is delivered from. Just before we start, I'd like to acknowledge the traditional lands on which we're meeting. I'm on Ngunnawal country here in Canberra, but I know that we're on traditional lands across Australia. I'd like to pay my respects to elders, past, present and emerging, as well as pay my respects to Aboriginal and Torres Strait Islander peer reviewers who are attending our meeting today.

A very big thank you to all of you who are attending this, as well as to our Peer Review Mentors.

As for the work that you're about to do, we could not do this without you and really appreciate your contributions. I'm very pleased to introduce and hand over to Dr Dev Sinha who leads the Ideas Grant Scheme and who will take us through some key information for peer reviewers today. Thanks Dev.

Sinha, Dev 3:08

Thank you, Julie, and a very good afternoon to everyone here today. I would also like to extend my thanks to every single one of you for taking the time to join the webinar today.

I'd like to acknowledge that this is an immense undertaking on your time and your expertise. We remain very grateful for your continued involvement with the Ideas Grant Scheme.

Today's session is not just a briefing. We hope that you've already had the chance to familiarise yourself with the assessment criteria and score descriptors for Ideas Grants. Our aim today is to make this more interactive by engaging with you on a couple of focus areas, particularly in relation to writing and reviewing comments. So with that in mind, I will try to keep the briefing part of the webinar really short and focussed.

I will take a few moments to discuss some general aspects of the scheme and the peer review process, talk a bit about reviewing comments and how to make best use of the budget comments section and explain the role of Peer Review Mentors during the assessment process and introduce our 2024 PRMs.

Our PRMs have kindly agreed to help out with answering questions today and then also advise you during the discussion modules that we have planned for today.

The first discussion module is on the comment sharing process. We will show you a few examples of anonymised comments, with the aim of discussing what issues you could potentially raise with your secretariat about these comments. We anticipate that this will generate some valuable discussion around comment writing and also allow our PRMs to advise you on how they approach comment writing as well. We will then move on to a second interactive module, a quiz using Menti. We'll ask you whether or not you consider the comments in the quiz appropriate or sufficient. Again, the idea here is to generate some conversation on comment writing and hopefully provide you with some examples of what really good comments look like, and also what to avoid when you're writing them yourselves as you assess applications this year. At the end of the two interactive modules, we'll have some time for a Q&A session, and this is your opportunity to seek advice from PRMs on any aspect of the Ideas Grants peer review process.

I'll start off with some feedback from the 2023 Ideas Grants Peer Review Survey, which we've tried our best to action this year. Firstly, as you might have already noted if you reviewed with us last year, our webinar this year is longer, with focused and interactive modules on comment writing, which was identified as a training focus area in the survey. We continue to try very hard each year to reduce your workload, and just for comparison purposes, I thought using some numbers here would be helpful. As an example, the maximum workload for Ideas Grant review was 30 applications per assessor in 2020, reducing to 25 applications in 2021 and 2022, and we've been able to decrease this further to between 15 and 18 in 2023 and 2024.

A few Sapphire processes have been streamlined to make things a bit easier. You will be able to write comments and scores directly into Sapphire and it will save all your work until you press ‘submit’. For budgets we now have a ‘yes/no’ screen, which I'll go into in a bit more detail when we get to the budget comments slides. Also, we have listened to you and this year the peer review period does not overlap with school holidays in any of our states and territories.

Before we go into the details of this scheme, you're reminded of the importance of confidentiality and privacy of NHMRC peer review processes. All information contained in an application is regarded as confidential unless otherwise indicated. You are undertaking an important professional role with legal obligations, and we enforce these through the Deed of Confidentiality that you signed. It is a reminder here again that the Deed of Confidentiality is actually a lifetime commitment.

We also ask that you please familiarise yourself with NHMRC’s position on the use of generative AI tools for peer review. Information provided to generative AI systems, such as natural language processing models and other artificial intelligence tools like ChatGPT, may become part of a public database which may be accessed by unspecified third parties. Therefore, we ask peer reviewers not to input any part of a grant application or any information from a grant application into a generative AI tool, as this would be a breach of your confidentiality undertaking. NHMRC will continue to monitor the rapid pace of development in generative AI and will update our policy as technologies and risks keep changing in this space.

Overview of peer review process

A quick overview of the round this year. Applications to Ideas Grants closed on 15th May and we received 2261 applications. The COI process is now complete. Thank you very much to each and every one of you for getting through that stage so quickly. At the moment we're in the final stages of allocating applications.

We aim to start assessments on 22nd July. Each application is being assigned to five reviewers for assessment wherever possible. We've also aimed to allocate no more than 18 applications for review to each peer reviewer. However, with late COIs and withdrawals, we may need to ask you to assess one or two more at a later stage. Huge thanks from us in advance to those who take on more review work for us at those later stages, it's extremely helpful.

I'm not going to go through each step of the timeline that's on display here, but as you can see from the general overview, it is really important to complete your assessments within the given time frame so that our outcomes can be delivered to applicants in a timely manner before the end of the year.

So what else is new in 2024? In response to feedback received from Research Offices requesting increased visibility of researchers who are participating in peer review from their institutions, NHMRC is piloting a new communication strategy for the 2024 round of the Ideas Grant Scheme. This year, we provided your participation details to RAOs at the start of the peer review process, with the idea that an updated list will also be sent at the conclusion of the Ideas peer review phase this year.

The intention is that this will allow RAOs to have better visibility of the involvement of their staff in peer review processes and then also they can help you manage your workload and other institutional responsibilities.

This year, as you might have already noticed, we are giving you specific dates on which we will need your involvement so you can plan your calendar around them. The caveat here obviously is that these dates will depend on the preceding activity being completed on time. Any extension or delay in one process has an obvious downstream effect on everything else.

| Dates* | Activity |
|---|---|
| 22 July - 19 August 2024 | Peer reviewers assess applications and submit scores and qualitative feedback against Ideas Grant assessment criteria for each allocated application |
| 3 - 12 September 2024 | Peer reviewers review qualitative feedback provided by other reviewers assessing the same application. NHMRC conducts outlier checks and reviews qualitative feedback (peer reviewers may be contacted during this time for further information or to confirm assessment information). |

* tentative dates

[Additional Resources: Please see your Ideas Grants Peer Review Guidelines and Task 2 Peer Review Support Pack]

Comment Review

I might speed through the next few slides as this will be material that has already been shared with you or will be shared with you in your assessment packs. So first, a quick look at the assessment criteria for Ideas Grants. We will be getting into each of these in a bit more detail when we discuss comments on each criterion in the later sessions that we have planned for you today.

On assessor comments, which are one of the prime areas of focus of this webinar: a reminder that peer reviewers are required to provide constructive qualitative feedback to applicants using factual language as far as possible. A comment is your expert assessment of the application and therefore the feedback that you provide as a peer reviewer is not considered to be the view of NHMRC. Your comments do go to the applicant, so ensure your criticism and comments are constructive and focus on the strengths and weaknesses of the application. If you find a flaw in the proposal, for example, you could explain the implications and possibly identify what the applicant could do better next time. Read the entire application before considering scores for individual criteria. However, when you're writing your comments for individual criteria, they should relate to that criterion only.

A little bit on the comment sharing process. Assessors view the comments provided by others who reviewed the same application. This process allows you to evaluate how your comments compare with those of other assessors of the same applications and holds you accountable to your peers for the comments you provide. To maintain independent peer review, it is not an opportunity to adjust your comments or your scores, but something that can be learned from and help you benchmark and increase the quality of your review.

It is also an opportunity for you to notify us if you notice an error or something inappropriate in the comments. Please let us know and we can follow up with the reviewer that provided those comments.

For the past few years, NHMRC has been conducting an outlier screening check in Ideas Grants to identify scores that differ significantly from those of other peer reviewers. We use statistical methods recommended by the Peer Review Analysis Committee (PRAC) to do this job. PRAC was an expert committee that advised the CEO on this issue, and there's more information on PRAC on our website if you're interested.

[Additional Resource: Please refer to the work of the Peer Review Analysis Committee]

Where outlier scores are identified, we will seek clarification from the peer reviewers if required. However, it is worth noting that there may often be valid, acceptable reasons for an outlier score. For example, it may reflect a specific expertise or judgement of the assessor, and therefore outlier scores are not necessarily always incorrect. This is just an extra quality assurance process we have put in place to ensure that the outlier score itself is not a result of an unintentional error or a typographical mistake.

Budget Review

A very quick look at budget review. When you review the application budget, you may notice that a particular budget item like a PSP or a specific research cost is not sufficiently justified by the applicant or is not in line with the proposed research objective. Things that you should be considering here are whether the budget requests align with NHMRC's Direct Research Cost guidelines and whether you think all the requested items are necessary, justified and represent value for money. This year we've made it slightly easier: there is a tick box for you to indicate whether or not you recommend adjustments to the budget. If the budget is reasonable and justified, please tick no and leave the budget comment box blank. If you're suggesting a change to a budget item, tick yes, and only then make a clear and specific recommendation in the budget comment field.

I have an example here of a clear, specific, actionable budget recommendation and an unclear version of the same recommendation that makes it very difficult for us to action. I will give you a couple of seconds to read them on screen.

It is worth noting that budget comments are reviewed by NHMRC research scientists before recommended budget reductions are made, so a clear recommendation actually makes our jobs a whole lot easier.

[The example mentioned is copied below for this transcript:

Correct version: 'The Research Assistant position is not justified at the PSP2 level; a PSP1 would be sufficient to carry out the responsibilities outlined in the proposal. Therefore, I recommend that the PSP2 position be reduced to PSP1.'

Incorrect version: 'I’m not sure about the need for the PSP 2 Research Assistant position'.]

Finally, just keep your secretariat informed if issues arise or if you require clarification or assistance, or if you wish to identify any eligibility or integrity issues. Seek advice from your Peer Review Mentors wherever required. We will be hosting 2 drop-in sessions with PRMs for you on 6th August and 13th August. We will be sending you more communication about these sessions shortly after the webinar.

Peer Review Mentors (PRMs)

I'd like to introduce our Peer Review Mentor process for this year.

The PRMs' primary role is to provide advice and mentoring during the assessment phase of peer review. They are able to provide advice to peer reviewers on broad questions around effective peer review, and advise you by sharing their own experiences, tips and tricks. Please bear in mind that PRMs do not assess applications themselves and they will not provide advice on the scientific merits of individual applications. Please contact your secretariat in the first instance and they will get in touch with a Peer Review Mentor on your behalf.

I'm very pleased to introduce our 2024 PRMs, who are hopefully all with us today. Please welcome Professor Louisa Jorm, Professor Stuart Berzins, Associate Professor Nadeem Kaakoush, and Doctor Nicole Lawrence. I would like to now invite our PRMs to introduce themselves very briefly and share any general tips and ideas they might have before we move on to the more interactive modules.

University of New South Wales

Nadeem Kaakoush 17:16

Good afternoon. I'm Nadeem Kaakoush. I'm an Associate Professor at the School of Biomedical Sciences at the University of NSW. My background is microbiology. My experience with NHMRC reviewing goes back to the Project Grant scheme in 2011, and I was a GRP early career observer. Then, with the Ideas Grants scheme, I was on a GRP in 2019 and then on the modified review panels in 2020, 2021 and 2022. I was a PRM last year as well. In terms of advice, I would say just review grants the way that you'd want your grants to be reviewed.

The same goes for the comments: write comments the way that you'd like comments to be written about your grants. Also, at the end of the process, try to implement some form of standardisation in your process so that when you're reviewing your grants, you're reviewing them all sort of equally, because there's a very big time gap between the starting point and the end point.

Federation University

Stuart Berzins 18:23

Hi everyone. I'm an immunology researcher. I run a research group at Federation University. I do some basic research and also some clinical type of research as well. I've also been in the system for quite a while. I've been on panels since the Project Grant days.

I'd like to repeat to some extent what Nadeem said, which is it's really important to be consistent in how you apply your assessments. It's important that you give the applicants the best chance of a very fair assessment. Stick to the category descriptors when you're assessing, and have a clear idea in your mind about what a four is, what a five is, and what a six is, so that you've got consistency across all of the applications that you assess.

University of Queensland

Nicole Lawrence 19:46

Hi everyone. I'm Nicole, Senior Research Fellow at the Institute for Molecular Bioscience. I've been reviewing the Ideas Grants, but I've also previously done Development Grants and the eAsia Grant Scheme.

My specific advice is read the criteria through really clearly first and then read your applications with purpose, specifically looking for evidence to help you score in each of the sections.

I have an offline document. I don't work directly on the online version, and I collect comments against the criteria related to strengths and weaknesses for each one as I'm going through. Then, when I get to the end of the process, I go back to my comments in each of their sections and formulate my response before submitting anything. I can have a look back through and make sure that my comments match the criteria. When I've done all of that, I will go back online and start entering things in from there.

University of New South Wales

Louisa Jorm

I’m Louisa Jorm. I’m the Director of Big Data Research in Health at University of New South Wales. My background’s in epidemiology, biostatistics, health data science and health services research. I’ve been involved with peer review in many NHMRC grant schemes over the years, including the old Project Grants, CREs, Investigator Grants and so on. I’ve often been chair of various grant panels. Obviously the system has now changed, we’re now scoring very much as individuals, and I would echo the comments others have made about trying to ensure your own internal consistency in the approach you take across the applications you have to review. But perhaps one thing, or two things, I have to say that the others perhaps haven’t said. The first is to just remember the difference between the Ideas Grant Scheme and other schemes, and the particular importance of the innovation and creativity that we are looking for here. For me, this is a big difference from the old Project Grants: this idea that we are looking at different things, maybe transformative in their impact, and that we’re less interested in track record but rather in whether the team has the capability to deliver this particular grant. The other thing I would say is that often you will get a set of grants which you may not be completely familiar with from a scientific perspective. There can be a little bit of a risk, as sometimes you are a little bit harsher on the ones you know better. It may be a lot more evident what some of the weaknesses are for those grants where you are familiar with the content area. So again, it’s that consistency and trying to make sure that you’re fair, and noting that there are always going to be differences in your degree of familiarity with some of the applications that you’re looking at. So I’ll perhaps stop there.

Dev Sinha

Thank you very much to all 4 of our PRMs. We are incredibly grateful for your involvement with us through today’s webinar and for the next few weeks as we go through the peer review process for Ideas Grants this year.

This brings us to the first of two interactive sessions that we are very excited to present to you this year. In this next session, we will take you through some examples of comments and ask you what you could highlight to your secretariat as a potential issue if this comment was shared with you.

I will now pass on to Katie Hotchkis, Assistant Director of the Ideas Grants team at NHMRC, to take us through the next two sessions.

***END OF RECORDING***

[The following live modules are available as a deidentified transcript below rather than a video recording to preserve the anonymity of the peer reviewers participating in them]

Discussion Module – Peer Review Comments

Hotchkis, Katie 24:20

Thank you, Dev. As Dev mentioned, the purpose of this exercise is to highlight the types of issues that you can raise with your secretariat during the comment sharing process. The slide shows some examples of possible concerns, but they are not exhaustive. Rather, they are meant to illustrate the kinds of things you can flag. Instead of going through each point on the slide, let's jump to our first example.

Question 1

What concerns could you raise with your secretariat about the following assessment (Capability):

The CI team regularly publish in very high-quality journals (Nat Med, NEJM, Nat Comm, Cell, etc.) and project builds on these studies and uses approaches that the team have very high expertise in. This clearly puts the capability in the exceptional category.

I'll give you a few seconds to read the comment and then we'll see if there's any volunteers that want to kick off discussion and share their view on any concerns or anything you notice from these assessment comments that you could raise with your secretariat. This is on the capability score descriptor.

Now that you've read it, are there any peer reviewers that want to kick off discussion?

Peer Reviewer 26:05

I was just going to say I would be concerned that this is based on the quality of the journals and not on the capability or the quality of the research that is produced by the research team. Journal quality doesn't necessarily reflect the research quality or the researcher's quality, as under the DORA declaration.

Hotchkis, Katie 27:14

Thank you for those comments. So just to summarise some high level points, the comments do not address the capability criterion adequately. In the capability criterion, you're required to think about access to technical resources, infrastructure, equipment, facilities and if required, those additional support personnel such as your associate investigators, which are necessary for the project. Also consider the balance of integrated expertise, experience and training that's targeted towards all aspects of that proposed research.

Additionally, as was said, the comment directly references the quality of the journals as a proxy for capability and track record.

So thank you for those contributions. We'll move on to the next one.

Question 2

What concerns could you raise with your secretariat about the following assessment (Capability):

I would like to see a stronger publication and grant track record for stage of career for the CIA. The CIA has not published any of the preliminary data supporting this proposal. However, there are a number of senior researchers supporting the proposed studies in the research team. The budget is appropriate for a four year project.

So this is also against the Capability criterion. What concerns could you raise with your secretariat for this one?

Peer Reviewer:

I think the main concern is the first sentence, the grant track record for stage of career of the CIA. It's the team, not necessarily just the CIA, and the track record shouldn't be a means to assess the grant, just an indication of that person's ability to do the grant, not the track record itself. Preliminary data shouldn't be needed either.

Hotchkis, Katie 29:30

Thank you for that. So with the focus on innovation and creativity as a scheme objective for Ideas Grants, preliminary results and pilot studies are not expected for the scheme. Risks should be identified and mitigation measures should be in place for the proposal. The other thing is that there are budget comments included here and as Dev highlighted earlier in the presentation, these should be part of the budget comment box, which in this case should just be a tick box without a comment because there are no adjustments being suggested. There is reference to publication and grant track record which are not part of our assessment criteria. Thank you, everyone for those contributions.

Question 3

What concerns could you raise with your secretariat about the following assessment (Innovation and Creativity):

I am not convinced that this application is creative enough.

Peer Reviewer 30:27

It’s not even specific. It's not saying why the application is not creative enough. So it's not justified.

Hotchkis, Katie 30:39

Perfect. Did anyone else have anything they wanted to add?

Peer Reviewer 30:43

I think it's a very vague assessment. You know, "it's not creative enough": what does that really mean? It is an innovative grant for innovative ideas. We should be much more explicit in our comments when we make these types of assessments.

Hotchkis, Katie 31:01

To summarise, this is an insufficient comment as it doesn't go into any detail of the assessment such as the strengths and weaknesses.

Question 4

What concerns could you raise with your secretariat about the following assessment (Significance):

Nothing is conceptually new here.

Peer Reviewer 31:40

An issue there for me is that it's not really about significance, it's about innovation.

It's also not much detail as well.

Hotchkis, Katie 31:48

We would consider this an insufficient comment. It doesn't draw on the strengths and weaknesses and doesn't address the category descriptors. The flaws of the proposal should have been touched on in this comment to give the applicant meaningful feedback that they could action in the future.

Quiz – Peer Review Comments

Sinha, Dev 32:15

We will now be moving on to a second interactive session on comments. You're going to need your smartphones to be able to scan the Menti QR code. Alternatively, you can also go to menti.com and there will be a code up on screen soon that you can enter to access the quiz. If for whatever reason you can't access it, that's fine. You will still be able to see the results on screen and follow along with the discussion, but you will not be able to vote.

We will be providing some examples of comments in this session and we'll ask you to answer the poll questions that follow next.

Hotchkis, Katie 33:21

I will bring up a question and then I'll move on to the polling option where you can vote on whether you think that the comments are appropriate against the criteria.

We'll go through 7 examples. You will have an opportunity to read each comment and cast your vote afterwards.

[Additional commentary by NHMRC: please note that parts of the comments have been anonymised with X, Y denominations]

Comment 1 (Capability)

The team has demonstrated their capability and resources necessary to perform the studies proposed. CIA has a good publication track record.

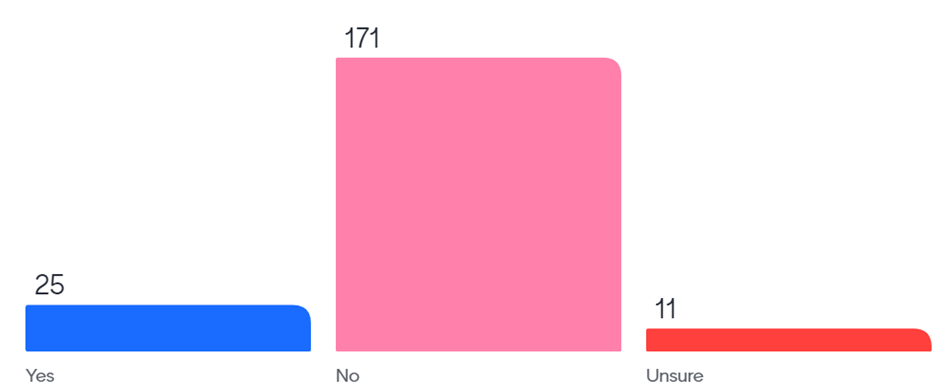

Question 1

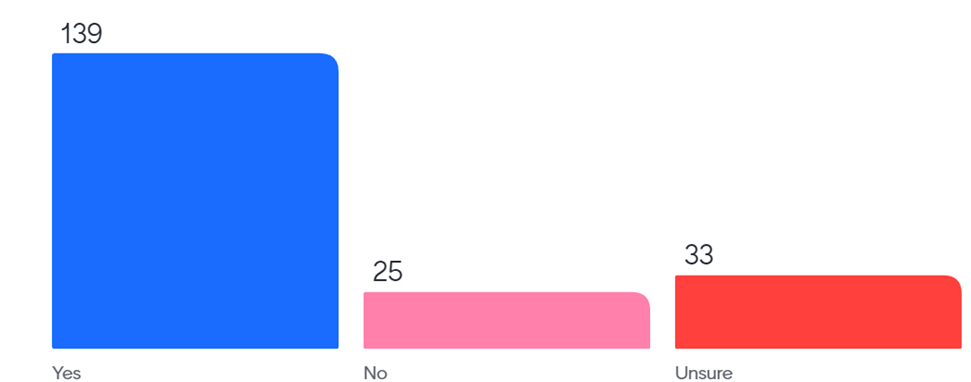

Do you think these comments are appropriate against the 'Capability' assessment criterion?

Discussion summary

This comment simply restates part of the score descriptor. It mentions publication track record, which is not part of the assessment criterion. There are no details on the strengths and the weaknesses of the application.

Comment 2 (Research Quality)

This is an excellent research plan. It takes three individual treatments which have each been shown to promote 'x outcome' after 'x event', and seeks to determine whether combining all three therapies gives significant added benefit.

There are a few weaknesses:

- Given that males are more likely to suffer from ‘X’, and there are some gender-based differences in recovery from Y, testing the best therapeutic strategy in groups of male rats would extend the relevance of the work.

- The endpoints and power calculation are unclear. Is the main endpoint a composite of the X test? Based on knowledge of individual treatments, what degree of improvement in a double or triple therapy would be considered a success? No power calculation is provided for the group size.

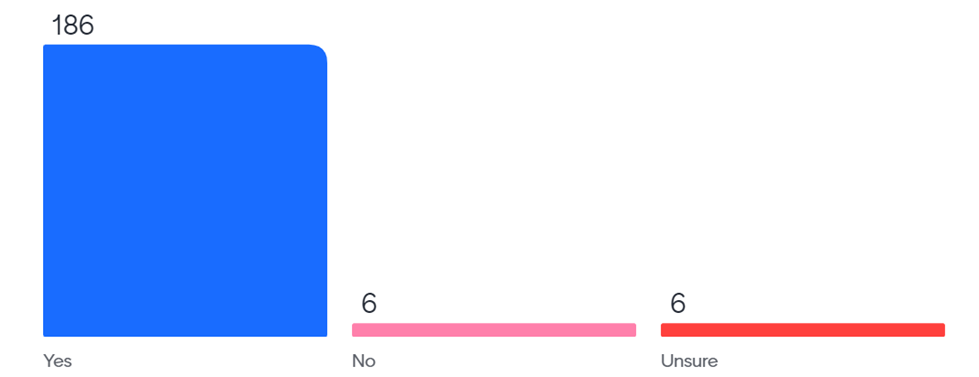

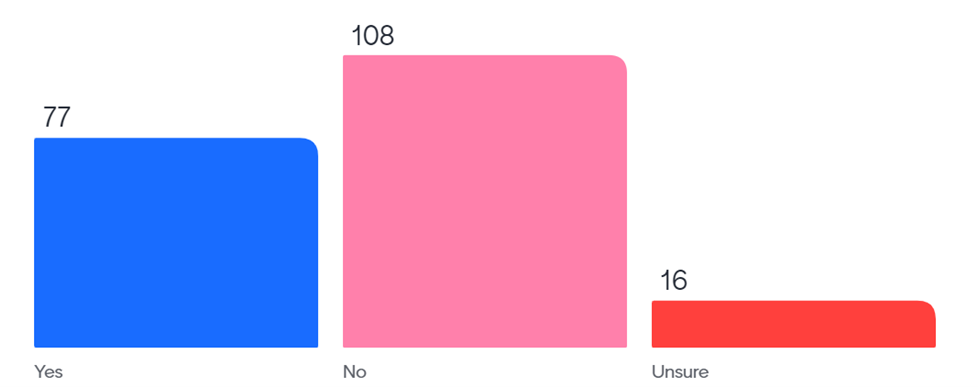

Question 2

Do you think these comments are appropriate against the 'Research Quality' assessment criterion?

Discussion summary

We would consider this a fantastic example of a comment that describes the strengths and weaknesses of the proposal in an in depth and meaningful way against the Research Quality score descriptor.

Comment 3 (Innovation and Creativity)

The research demonstrates highly innovative methods and project aims, which may result in a substantial shift in the current paradigm or lead to a substantial breakthrough.

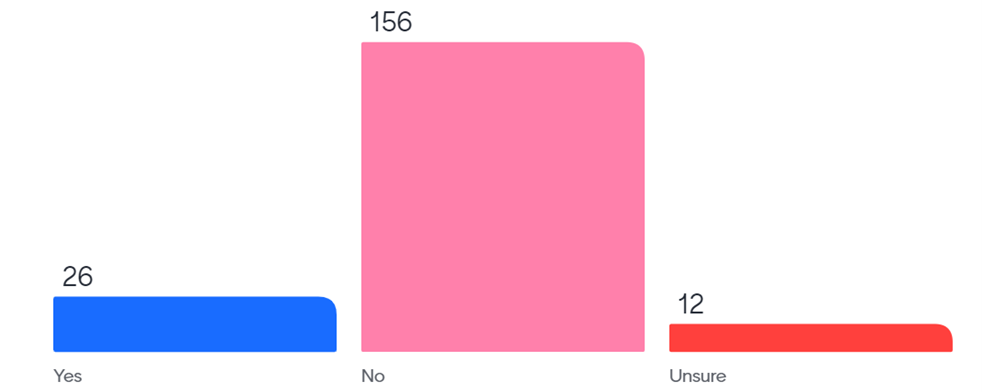

Question 3

Do you think these comments are appropriate against the 'Innovation and Creativity' assessment criterion?

Discussion summary

This comment restates part of the score descriptor, and there are no details on the strengths and the weaknesses of the application against that criterion.

Comment 4 (Research Quality)

The research plan is supported by a well justified hypothesis and preliminary data which appears robust, however figure sizes have made it difficult to be convinced.

The aims are focused, well-defined, very coherent and have an excellent study design and approach. The main weakness of this proposal is the reliance on one mouse cell line.

The obvious risk that the mouse cell line does not fully recapitulate human neurology is not addressed. To strengthen this proposal use of X should be included. Furthermore, X have recently been defined as the key drivers of pathology in X disease. Extending this study to other X cells would profoundly improve study design. Overall, this proposal would be competitive with the best, similar research proposals internationally.

Question 4

Do you think these comments are appropriate against the 'Research Quality' assessment criterion?

Discussion summary

This is a great example of a well-articulated, meaningful and constructively critical review of the application that is within the bounds of the criterion. It talks about the strengths of the application and also includes some weaknesses.

Comment 5 (Capability)

Strength: The team has exceptional capability to complete the proposed project and access to exceptional technical resources. CIA has clearly worked on xx-focused projects. The collaboration between CIs and AIs is established.

Weakness: CIA doesn’t have demonstrated team leadership.

Question 5

Do you think these comments are appropriate against the 'Capability' assessment criterion?

Discussion summary

This is a good example of a comment that describes the strengths and weaknesses of the CI and AI team. It is clear, concise and sticks to the scope of the assessment criterion.

Comment 6 (Research Quality)

Positives:

- The outcomes will generate new knowledge for X issue in disease Y.

- The outcomes will lead to extracting new information using X technology and Y data.

- The outcomes will generate valuable bioinformatic resources for the biomedical research community.

Negatives:

- More details about possible clinical translation would have been beneficial.

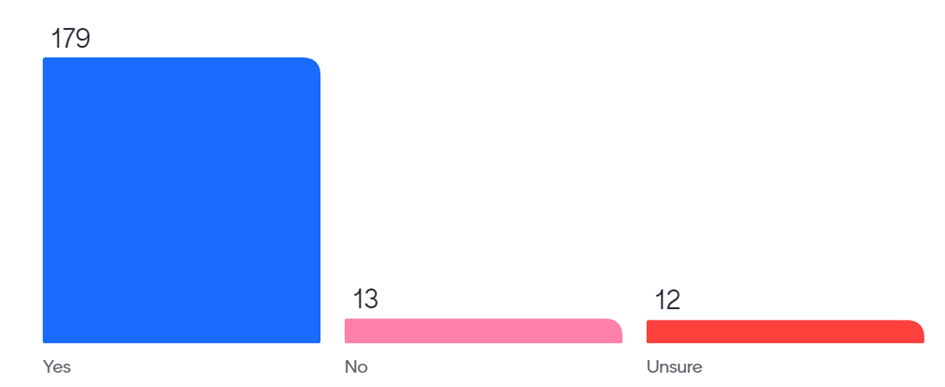

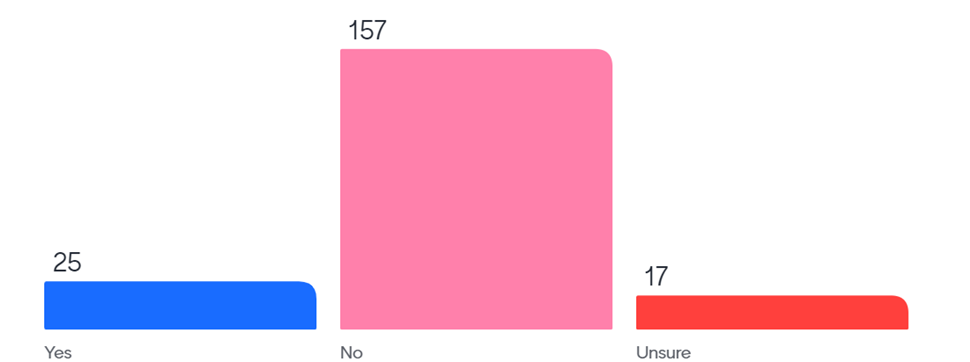

Question 6

Do you think these comments are appropriate against the 'Research Quality' assessment criterion?

Discussion summary

It is an example of a comment that describes the strengths and the weaknesses of the proposal and it is the sort of feedback that applicants are more likely to find helpful.

Comment 7 (Significance)

This is an important project, but targeted at a very small and very specific group of people, as such the impact of the potential outcomes is somewhat limited.

Question 7

Do you think these comments are appropriate against the 'Significance' assessment criterion?

Discussion summary

The assessment of significance does not depend on how small the impacted population is or how rare a condition is. The assessment should be made on whether the outcomes or outputs will result in advancements to, or impacts in, the research area.

Sinha, Dev 41:19

Thank you for attending these last two sessions. We have focused on an area that you have identified as needing more training through your surveys and conversations. I hope you have found it useful.

Before we start the Q&A session, I would like to invite our PRMs to share some general tips on writing comments, such as how they approach them, what pitfalls they avoid, and what they look for when they receive comments.

Do you have any general advice that you can offer to the group?

Stuart Berzins 42:08

A common error when writing comments is to focus too much on one aspect of the grant that stands out to you, either positively or negatively. This can skew your score and make it inconsistent with the category descriptors. You should look at the grant holistically and consider all the relevant criteria. Don't let one element overshadow the rest.

Nicole Lawrence 42:52

I think the answers to question 6 in the quiz show that the audience was divided because the comments were vague and generic. They repeated the criteria but didn't provide specific examples or evidence. A better way to write comments is to collect and write specific feedback that relates to the grant you are reviewing. This will help you score more accurately and give useful feedback to the applicants.

Nadeem Kaakoush 43:40

Instead of asking questions in the comments, which are not helpful since there is no rebuttal process, be more direct and clear about what you liked and what you didn't like in the grant application.

Louisa Jorm 43:56

I’ll also add: try to give feedback that you would like to receive yourself and that can help the applicants improve their grant in the future. Many applicants don't understand why they were rejected and don't know how to make their grant better next time. So, be specific and constructive in your comments and provide suggestions for improvement if possible.

Sinha, Dev 1:55:45

Thank you all for joining us today and I hope this session was useful. This is not the end of the process, so if you have any questions that you couldn't ask today, you can contact your secretariat at any time while you are doing the assessment. They will be happy to help you or ask questions to the PRMs on your behalf.

We will also have two more drop-in sessions that we will send you more information about soon. In those sessions, you can also ask us any questions you have. Your secretariat is your first point of contact, but you can also email the Ideas Grants inbox if you need to. Thank you again for your participation. I hope you found the examples of anonymised comments that we showed you today helpful. We will ask for your feedback at the end of the round on the whole process, as well as on this training module and the drop-in sessions with the PRMs. Please share your opinions with us. We value your feedback and we update our processes every year based on what you tell us. Please stay engaged in that process as well.

Thank you very much. I just want to check if there are any final words from the PRMs or Julie.

Glover, Julie 1:57:27

I just want to say a big thank you to everyone for the discussion today. Your questions were excellent and they helped us identify what we might need to clarify or expand in the peer review guidelines or FAQ. You have shown that you have read all the documentation and you have a lot of knowledge already. I also want to thank our PRMs for their answers and for sharing their insights on how they do their work. Peer review is very important work and it was great to hear from you how you approach it. Thank you for that. And also thanks to Dev and her team for organising this briefing in a different way and format. I think it worked very well, but as Dev said, we would love to hear your feedback on that too. Thank you very much and please remember that we are here to support you with anything you need while you are doing your peer review. Our Peer Review Mentors are also here to assist you. Thank you.