
CEO Communique, February 2021

Key points

For peer review of 2021 Ideas Grants:

- NHMRC will seek five independent assessments for each application

- Reviewers will provide scores and written feedback against each assessment criterion

- Grant Review Panel (GRP) meetings will not be held

- Peer reviewer training will be enhanced.

This communique presents the background and rationale for these changes, in particular:

- Not holding GRP meetings will allow optimal matching of peer reviewers to each application, improving the quality of assessment

- Streamlining the peer review process will reduce the workload on individual reviewers, enable a broader range of reviewers to participate and shorten the time needed for peer review

- These changes will lay the groundwork for new and more flexible approaches to peer review of NHMRC’s major schemes over time, such as multiple cycles per year.

Background

1. History of peer review for NHMRC’s new grant program

NHMRC continues to look for ways to improve peer review, based on our own experience and that of other funding agencies internationally. Peer review by independent experts against published criteria is considered the international gold standard for allocating research grants, but there is no universally agreed best process. Design of peer review processes can reflect a range of factors, including the scope and scale of the grant scheme, assessment criteria, availability of expert reviewers, resources (funds and staff), time available to make decisions and other local or historical conditions.

In 2017-18, after the structure of the new grant program was announced, NHMRC undertook a national consultation on the design of peer review processes for the four new schemes: Investigator, Synergy, Ideas, and Clinical Trials and Cohort Studies Grants. In seeking feedback from the research sector, NHMRC emphasised that the approach to peer review needs to strike a balance between burden on the research community (applicants and reviewers) and the rigour, transparency and fairness of the process. Although responses ranged widely (see Consultation Report), a number of themes emerged in the consultation, of which the following are relevant to this communique:

- the need for appropriate expertise in peer review

- preference for a larger number of independent assessments (minimum 4 or 5 per application)

- broad support for Grant Review Panel (GRP) meetings as a component of the process

- concerns that not all GRPs have appropriate expertise and are over-reliant on the primary spokesperson

- some support for approving the best applications after independent assessment without going to a GRP meeting, provided there were sufficient assessments

- the importance of feedback to applicants

- support for innovative approaches to peer review (such as multiple rounds per year and iterative peer review)

- concerns about the burden on assessors and the number of GRPs needed for the expected large volume of Ideas Grant applications.

Following the consultation and discussions with NHMRC’s Research Committee and other advisory committees, peer review processes for the new schemes were announced in 2018 and rolled out in the first application round in 2019.

2. 2019 Ideas Grants – peer review in the first round

In the first round in 2019, peer review of Ideas Grants was based on GRPs. Applications and reviewers were grouped into 39 discipline-based GRPs. Each application was then reviewed independently by four spokespersons from the GRP. Based on their scores, shortlisted applications were reviewed and rescored at a GRP meeting. Grants were awarded based on final GRP scores.

For the Investigator and Ideas Grant schemes that receive a large volume of applications, NHMRC has used ‘superpanels’ to group peer reviewers with applications based on related research topics. The superpanels have then been split into multiple smaller panels (GRPs). This maximises the ability to identify the most suitable peer reviewers for each application within the constraints of the GRP process. For more explanation, see the New Grant Program 2019: Peer Review Factsheet.

In 2019 for the first round of Ideas Grants, the peer review process was as follows:

- groups of 150-200 applications were allocated to 15 superpanels of potential peer reviewers

- potential peer reviewers provided declarations of conflicts of interest (COI) and suitability to review each application allocated to their superpanel

- based on these declarations, superpanels were split into a total of 39 discipline-based GRPs (such as Cancer Biology or Indigenous Health) and 75-100 applications were assigned to each GRP of about 15 members

- each application was independently assessed by four GRP members (spokespersons) who provided scores against each assessment criterion

- mean reviewer scores were used to produce a single ranked list of applications

- from this list, the top third of applications were short-listed (about three times the expected funded rate) and the remainder were deemed to be not for further consideration (NFFC)

- short-listed applications were considered at face-to-face GRP meetings in Canberra, with all members (subject to any COI) scoring each of their panel’s short-listed applications

- funding recommendations were developed from the ranked list of GRP scores

- applicants were provided with mean scores for each assessment criterion without written feedback; data on the distribution of scores for all applications were also provided for benchmarking within the round.
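The ranking and short-listing steps above can be illustrated with a minimal Python sketch. It assumes each reviewer's criterion scores have already been combined into a single overall score per application (the criterion weightings are not described here), does not handle ties, and uses a hypothetical helper name, `rank_and_shortlist`. It is an illustration, not NHMRC's actual implementation.

```python
from statistics import mean


def rank_and_shortlist(scores_by_app, shortlist_fraction=1 / 3):
    """Rank applications by mean reviewer score; split into short-listed and NFFC.

    scores_by_app maps an application ID to its reviewers' overall scores
    (an assumed simplification of per-criterion scoring).
    """
    means = {app: mean(scores) for app, scores in scores_by_app.items()}
    # Single ranked list, highest mean first (ties are not handled specially).
    ranked = sorted(means, key=means.get, reverse=True)
    # Roughly the top third is short-listed; the rest are NFFC.
    cutoff = round(len(ranked) * shortlist_fraction)
    return ranked[:cutoff], ranked[cutoff:]


# Hypothetical scores on the 1-7 scale from four spokespersons each.
scores = {
    "A": [5, 6, 5, 6], "B": [4, 4, 5, 4], "C": [6, 6, 7, 6],
    "D": [3, 4, 3, 4], "E": [5, 5, 5, 5], "F": [4, 3, 4, 4],
}
shortlisted, nffc = rank_and_shortlist(scores)
print(shortlisted)  # ['C', 'A']
print(nffc)         # ['E', 'B', 'F', 'D']
```

A fixed fraction of the ranked list is used here because the communique describes the short-list as about a third of applications; the real cut-off was set relative to the expected funded rate.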

How is peer reviewer suitability determined?

Potential reviewers are provided with the application summary (project title, research team, administering and participating institutions, research classifications and synopsis) and asked to indicate whether they are confident in their ability to understand and assess the proposal against the assessment criteria. Four options are offered: Yes, Moderate, Low, No.

Feedback from the 367 respondents to the Ideas Grants Panel Member Survey at the end of the round indicated that:

- 51% agreed or strongly agreed that applications assigned to them as spokesperson matched their areas of expertise (see Appendix A)

- 77% agreed or strongly agreed that they had sufficient time to prepare the spokesperson assessments

- 68% agreed or strongly agreed that the peer review workload for the scheme was reasonable

- most felt that the process was very much or somewhat transparent (65%), independent (86%), rigorous (71%), fair (54%), high quality (67%) and gender neutral (75%).

Comments from members who disagreed with these statements mostly reflected concerns about (1) assignment of applications to spokespersons with limited suitability, (2) the breadth of disciplines covered by their GRPs, so that members had insufficient expertise to review all applications, and (3) high workload and insufficient time to prepare. The following comments are examples.

The panels are far too broad, and encompassed many areas outside my expertise. Such panels rely on there being at least one expert for each grant - the opinion of the one individual may then sway the remaining non-experts on the panel. (Peer reviewer, Ideas Grants 2019)

The panel and grants were too diverse. ….That means that no grant is evaluated by a "panel", it's simply evaluated by the few experts in the room, and everybody else has to decide how much they trust those experts. (Peer reviewer, Ideas Grants 2019)

It was a very good idea to assign applications to four spokespersons from the panel rather than invite reviews from external assessors. However, the applications will receive a more balanced review if at least two of the four spokespersons have expertise in the area of the application, particularly the primary spokesperson. (Peer reviewer, Ideas Grants 2019)

Not all comments were negative.

I think the panel I was part of had complimentary (sic) expertise. This made for robust and fair discussion of each application from a range of perspectives. Overall I think the make up of my panel was excellent. (Peer reviewer, Ideas Grants 2019)

...I do think there are also strengths associated with having panels review a wide range of applications, which are not necessarily in the members' area of core experience. It avoids "old-boys'-networks". But it does definitely increase the workload for the panel members. (Peer reviewer, Ideas Grants 2019)

Analyses of the impact of panel discussions on application scores in the 2019 Ideas Grant and Investigator Grant rounds are presented in Appendix B.

For Ideas Grants, the data showed that spokespersons most commonly reduced their scores for an application (usually for a single criterion by a single point) and the remainder of the panel most commonly reduced the application score further as a result of discussion at a GRP meeting. As a result, average spokesperson scores declined for ~58% of applications and final scores declined for 75% of applications compared with average pre-GRP spokesperson scores. For most of the remaining applications (37%), the average spokesperson score increased at the GRP meeting; however, despite the generally higher suitability of spokespersons than other panel members for each grant, fewer grants received a higher final score from the panel as a whole (25%).

An alternative approach to panel discussion was trialled in the 2019 Investigator Grant round – discussion by exception. Few applications were nominated for discussion and the impact on funding outcomes was modest (affecting 0.6% of applications) for the extra burden on reviewers and the time added to the review process.

The 2019 experience with Ideas Grants highlighted the following points:

- For this large scheme covering diverse fields of research, the need to group reviewers with applications to form a manageable number of GRPs constrained the selection of the most suitable spokespersons for each application.

- It also meant that some GRP members may have had limited expertise to review and discuss many applications assigned to their panel.

- Discussion at a GRP meeting changed the score of most applications compared with the scores given by the four spokespersons pre-GRP, most frequently lowering the score. Even when average spokesperson scores increased at the GRP meeting, final panel scores were generally lower than the revised average spokesperson score.

3. 2020 Ideas Grants – peer review in the year of COVID-19

In 2020, GRP meetings were removed from the peer review process for the Ideas Grant scheme because of the COVID-19 pandemic. This change significantly reduced the workload for peer reviewers and saved about 3 months, enabling the release of outcomes in 2020. By eliminating the need to group reviewers and applications into GRPs, there was more flexibility to match four suitable reviewers to each application.

In early 2020, there was widespread concern that it would be impossible to deliver a major grant scheme during the pandemic because applicants, peer reviewers, and both institutional and NHMRC staff would be affected by illness, caring responsibilities, changed professional roles (such as front-line clinical and public health activities) and closure of workplaces.

As the Ideas Grant round was already underway during the first pandemic wave, NHMRC extended the submission deadline from early May to mid-June to give applicants more time and removed GRP meetings from the process to maximise the chance of delivering outcomes by the end of the year (see CEO Communique, May 2020). Many peer reviewers withdrew during 2020 due to competing demands. Nevertheless, thanks to the extraordinary efforts of 585 reviewers to deliver almost 12,000 individual Ideas Grant reviews, outcomes were released under embargo on 30 November 2020.

GRP meetings add about 3 months to the process for a large scheme like Ideas Grants (see GRP workload below for more detail). For this reason, outcomes of the 2020 Ideas Grant round would not have been available until February/March 2021 if GRP meetings had been held.

The removal of GRP meetings provided an opportunity to trial an ‘application-centric’ approach to the selection of peer reviewers in the 2020 Ideas Grant round. Without the need to group applications and reviewers into GRPs (the ‘panel-centric’ approach used in 2019), applications were matched to reviewers from a bigger pool, providing more options to optimise their suitability. For more information about this process, see Appendix A.

The following process was used:

- groups of about 120-270 applications were allocated to 15 superpanels of potential peer reviewers

- potential peer reviewers provided declarations of COI and suitability to review each application allocated to their superpanel

- each application was assigned to four reviewers based on their COI/suitability declarations and each reviewer was allocated 25-30 applications

- reviewers provided scores against each assessment criterion (no written comments) and reviewed budget requests, proposing specific adjustments in some instances

- budgets flagged for attention by reviewers were reviewed by NHMRC staff scientists and adjusted where appropriate

- mean reviewer scores were used to produce a single ranked list of applications from which funding recommendations were developed

- applicants were provided with mean scores for each assessment criterion without written feedback; data on the distribution of scores for all applications were also provided for benchmarking within the round.

Feedback from approximately 300 respondents to the Ideas Grants Panel Member Survey at the end of the round indicated that:

- 75% agreed or strongly agreed that applications assigned to them as spokesperson matched their areas of expertise (see Appendix A)

- 73% agreed or strongly agreed that they had sufficient time to prepare the spokesperson assessments

- 64% agreed or strongly agreed that the peer review workload for the scheme was reasonable

- respondents felt that the process was very much or somewhat transparent (47%), independent (77%), rigorous (52%), fair (38%), high quality (48%) and gender neutral (46%)

- 75% considered that the removal of GRPs very much (39%) or somewhat (36%) affected their ability to provide a thorough assessment of the applications assigned

- when asked which element was more important for the robustness and fairness of peer review, 56% favoured GRP meeting discussions and 44% favoured maximising the expertise matching of reviewers to applications.

Common concerns expressed by members were:

- lack of transparency and accountability without GRP meetings or written feedback

- the value of discussing applications at GRP meetings, especially for new reviewers or those with less relevant expertise, to enable consistency of scoring and management of outlier scores

- the workload involved in suitability/COI declarations and reviewing large numbers of applications.

GRP meetings allow all scoring to be justified and disagreements to be considered and balanced. (Peer reviewer, Ideas Grants 2020)

The panel discussions are very useful to talk through the grants - the shared perspectives can have a big impact on the scoring (e.g. one individual may spot a significant weakness in the grant that others can then attest to, thus reducing the score or a strength overlooked by others may be proposed by a panel member and generate robust discussion resulting in an increased score and the important work funded). I would strongly encourage that panels remain an integral part of the review process. (Peer reviewer, Ideas Grants 2020)

Panels can monitor rogue assessors. (Peer reviewer, Ideas Grants 2020)

On the other hand, many members commented that the absence of GRP meetings helped them to manage workloads and other responsibilities, including parenting.

Not needing to attend panel meeting was beneficial to my family commitments and schedule. (Peer reviewer, Ideas Grants 2020)

The absence of the meetings allowed me to participate from interstate, otherwise I would have been excluded, so thank you. (Peer reviewer, Ideas Grants 2020)

Some noted that they were confident in their scoring without discussion and that GRPs can be dominated by individual panel members.

Views of dominant members of GRPs can overly influence other scores. (Peer reviewer, Ideas Grants 2020)

I do not think the panels provide good input for the time and expense. None of the panel comments are provided to applicants. The 2 panels I was part of in previous years were heavily influenced by 1 or 2 outspoken individuals who wanted their views heard. At least without panel, people are able to do their own individual assessments without influence. (Peer reviewer, Ideas Grants 2020)

I thought having no panel was an improvement. I don't think it is necessary to discuss grants in person, I think most people can do scoring properly if trained. (Peer reviewer, Ideas Grants 2020)

Many thought it would be ideal to combine application-centric reviewer selection with retaining GRP meetings. (It is important to note that this would only be possible if each combination of spokespersons comprised its own GRP, membership of which would overlap with other GRPs. If most applications had a unique combination of spokespersons, the number of GRPs would approach the number of applications and conventional meetings would not be feasible.)

BOTH - the system is open to rorting as no justification is required where panel is missing and panel discussions are very helpful in refining the scores. BUT - panel is heavy influenced by loud, unchecked personalities so bias there anyway. Either way - the best grants typically get funded. (Peer reviewer, Ideas Grants 2020)

Panel can help to reach fair outcome but it can also distort it if individual panel members "dominate" the discussion. There are arguments to support and oppose both solutions (even if we assume that the same reviewers would review an application) but the fact that without panels the reviewers are more likely to be experts outweighs the disadvantages of the lack of the panel discussion. (Peer reviewer, Ideas Grants 2020)

Both NHMRC’s data (based on reviewers’ declared suitability before peer review) and responses to the Panel Member Survey suggested better matching of reviewers to applications in 2020 than in 2019 (Appendix A).

The 2020 experience with Ideas Grants highlighted the following points:

- Without the need to form GRPs, the matching of suitable peer reviewers to applications was markedly improved.

- The lack of either GRP meetings or written feedback from reviewers reduced the transparency and accountability of the peer review process.

- Many reviewers were concerned about the removal of GRPs while others noted the advantages of independent scoring and increased ability to participate in peer review.

2021 Ideas Grants

1. Peer review process in 2021

Important changes will be made to the peer review process for Ideas Grants in 2021:

- an application-centric process will be used to select peer reviewers, optimising the matching of reviewer expertise to each application

- the number of reviewers sought for each application will be increased from four to five

- reviewers will provide written comments against each assessment criterion in addition to numerical scores, and applicants will receive these comments alongside the scores and benchmarking data provided previously

- GRP meetings will not be held.

Application-centric reviewer selection means that the five best available reviewers will be identified for each application, where ‘best’ means that suitability is maximised while avoiding COI. Compared with panel-centric reviewer selection required to form GRPs (as in 2019), this approach increases flexibility in the choice of reviewers. As each application can have a unique combination of reviewers, there is better matching of reviewers to individual applications and better use of reviewer expertise (Appendix A).

The peer review process will be as follows:

- potential peer reviewers will be identified by NHMRC and asked to provide declarations of suitability and COI against a set of applications

- each application will be assigned to five reviewers based on their suitability/COI declarations, with most reviewers assigned 20-25 applications where possible to balance workloads and assist their benchmarking

- each application will be independently assessed by the five reviewers who will provide scores and brief written comments against each assessment criterion

- any budgets flagged for attention by reviewers will be reviewed by NHMRC staff scientists

- funding recommendations will be developed from the ranked list of the combined scores of the independent reviewers

- applicants will receive mean scores for each assessment criterion, their mean total score, overall category score and written comments from assessors.
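Application-centric selection, as described above, means choosing the best available reviewers for each application individually rather than for a panel. A simplified Python sketch follows: for each application, it picks the most suitable reviewers who have declared no COI, subject to a cap on each reviewer's load. The greedy strategy, the numeric ranking of the suitability levels (Yes, Moderate, Low, No), the function name `assign_reviewers` and the default limits are all assumptions for illustration. NHMRC's actual allocation method is not described in this communique, and a greedy pass depends on the order in which applications are processed, unlike a global optimisation.

```python
# Hypothetical numeric ranking of the declared suitability levels.
SUITABILITY_RANK = {"Yes": 3, "Moderate": 2, "Low": 1, "No": 0}


def assign_reviewers(declarations, applications, reviewers_per_app=5, max_load=25):
    """Greedy application-centric assignment (illustrative sketch only).

    declarations maps (reviewer, application) -> (suitability, has_coi).
    Returns a dict mapping each application to its chosen reviewers.
    """
    load = {reviewer: 0 for reviewer, _ in declarations}
    assignment = {}
    for app in applications:
        # Eligible: declared on this application, no COI, some suitability,
        # and still below the workload cap.
        candidates = [
            (SUITABILITY_RANK[suitability], reviewer)
            for (reviewer, a), (suitability, has_coi) in declarations.items()
            if a == app and not has_coi and suitability != "No"
            and load[reviewer] < max_load
        ]
        # Most suitable first; ties broken by lighter current load, then name.
        candidates.sort(key=lambda c: (-c[0], load[c[1]], c[1]))
        chosen = [reviewer for _, reviewer in candidates[:reviewers_per_app]]
        for reviewer in chosen:
            load[reviewer] += 1
        assignment[app] = chosen
    return assignment


declarations = {
    ("R1", "A1"): ("Yes", False),
    ("R2", "A1"): ("Moderate", False),
    ("R3", "A1"): ("Yes", True),  # conflict of interest: excluded
    ("R1", "A2"): ("Yes", False),
    ("R2", "A2"): ("Low", False),
    ("R3", "A2"): ("Moderate", False),
}
print(assign_reviewers(declarations, ["A1", "A2"], reviewers_per_app=2, max_load=1))
# {'A1': ['R1', 'R2'], 'A2': ['R3']}
```

With a cap of one application per reviewer, A2 receives only one reviewer, which shows how workload limits can leave an application short of its target number of reviewers.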

The introduction of written feedback to applicants in the 2020 Investigator Grant round was well received with 70% of respondents to the Peer Reviewer Survey reporting that summary statements very much or somewhat improved the fairness/robustness of peer review. Comments highlighted the importance of reviewers justifying their scores and the value of feedback for the applicant.

Although the workload for each reviewer is substantial, we hope that the streamlining of peer review to the single-step process outlined here will make it easier to engage a large group of suitable reviewers.

Additional support will also be available to peer reviewers in 2021:

- a small number of experienced peer reviewers will serve as Mentors to provide one-on-one advice on peer review issues (but not scientific issues or individual grants)

- peer reviewer training will be improved – NHMRC is currently seeking feedback from 2020 reviewers on preferred mechanisms to provide improved training (e.g. webinar, video)

The decision to use independent assessments without GRP meetings is a very important one. The reasons are outlined in the next section.

2. Rationale for changing Ideas Grant peer review in 2021

The following table presents the main advantages and disadvantages of independent assessments with and without GRP meetings, based on NHMRC’s experience together with formal and informal feedback from applicants, peer reviewers, Research Committee and other advisory committees.

|  | Independent assessments without GRP meetings | Independent assessments with GRP meetings |
|---|---|---|
| Advantages |  |  |
| Disadvantages |  |  |

GRP workload

Whether they are held in person or virtually, GRP meetings add about 3 months to the peer review process for a large scheme like Ideas Grants, with the following steps:

- Short-listing and “rescue” – Following receipt of all initial scores from spokespersons, each GRP can review the preliminary ranked list for their panel and ‘rescue’ applications deemed to be NFFC for discussion at the GRP meeting. Once the NFFC and rescue process is complete, the order of discussion is provided to panel members.

- GRP member preparation – Spokespersons prepare to present the strengths and weaknesses of any of their assigned applications that have been shortlisted for discussion. Members must also familiarise themselves with all other applications being discussed by their GRP, not only those they are assigned as a spokesperson.

- Six-week GRP sitting period – In 2019, 39 Ideas Grant GRPs (6-7 per week) each met for about 3 days to discuss and score all their panel’s shortlisted applications.

- Development of a ranked list from GRP scores and quality assurance – Following the GRP meeting, all records and processes from the meeting discussions are thoroughly checked, including follow-up with spokespersons where necessary. Funding recommendations are then prepared.

Feedback from peer reviewers through the Panel Member Survey after the 2019 Ideas Grant round provided the following information on the workload attributable to GRP meetings:

- In addition to the time spent undertaking their initial assessments, 11% of reviewers spent <1 hour, 46% spent 1-2 hours, 31% spent 2-4 hours and 12% spent >4 hours per application preparing for the GRP meeting.

- With 1001 applications short-listed for GRP discussion and assuming an average of 15 members per panel and 2 hours of preparation per member per application, panel members collectively spent about 30,000 hours preparing for GRP meetings.
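The collective preparation estimate above is a simple product. It assumes that every member prepares every short-listed application in their panel, ignoring COI exclusions:

$$
1001 \times 15 \times 2\ \text{h} = 30\,030\ \text{h} \approx 30\,000\ \text{h}
$$

As a rough check on the 2-hour assumption, the survey distribution can be averaged using assumed representative values for each band (0.5 h for <1 hour, 1.5 h for 1-2 hours, 3 h for 2-4 hours and, arbitrarily, 5 h for the open-ended >4 hours band):

$$
0.11(0.5) + 0.46(1.5) + 0.31(3) + 0.12(5) = 0.055 + 0.69 + 0.93 + 0.60 \approx 2.3\ \text{h}
$$

Under these assumptions, 2 hours per member per application is, if anything, a conservative figure.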

3. Implementing application-centric selection of peer reviewers for Ideas Grants

Noting the balance of advantages and disadvantages outlined above, NHMRC considers that independent assessments without GRP meetings (allowing application-centric reviewer selection) will improve the quality of peer review of Ideas Grant applications – especially by improving the matching of peer reviewer expertise to applications across all fields and by enabling true independence of peer review.

In 2021, as in 2020, using this approach will also increase flexibility to manage continuing impacts of the COVID-19 pandemic on the peer review process and the likelihood of delivering grant outcomes by the end of the year.

Independent assessment without GRP meetings has already been introduced for Investigator Grants, with five assessors per application and written feedback to applicants. With our experience so far, we think that this approach is especially suitable for schemes that receive a large number of applications (1780 for Investigator Grants and 2995 for Ideas Grants in 2020) across the full breadth of health and medical research fields. The disadvantages of GRP meetings (requiring panel-centric reviewer selection) are most obvious for these large schemes because of the challenges of forming panels with appropriate specificity and breadth of expertise. By contrast, NHMRC plans to continue using GRPs for smaller schemes, including the Clinical Trials and Cohort Studies Grant scheme.

In moving to application-centric reviewer selection without GRP meetings for Ideas Grants, there are issues to understand and manage:

- Calibration of scoring between reviewers – how to ensure that all reviewers use the category descriptors and 1-7 scoring range appropriately to reflect their assessment

- The impact of divergent (“outlier”) scores – how to identify and (if appropriate) manage instances where one reviewer scores markedly away from other reviewers, while noting that divergence is not necessarily undesirable or incorrect if it reflects true differences in expert opinion

- Avoiding scoring errors – how to identify and manage scores that do not align with assessor comments.

Several approaches are in place or in development to manage these issues, for example:

- Peer reviewer guidance and training are important ways to foster appropriate use of the scoring matrix. Further guidance is being developed for 2021.

- The assignment of at least 15-20 applications to each reviewer helps them to benchmark application quality and to calibrate their scores.

- Increasing the number of reviewers per application from four to five reduces the impact of a single divergent score.

- NHMRC has established a Peer Review Analysis Committee to advise the CEO on the management of peer review scores, including calibration and divergent scores.
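The effect of adding a fifth reviewer on a single divergent score can be shown with a worked example. Assume, for illustration, that $n-1$ reviewers give the same score $s$ and one reviewer differs from them by $d$ points. The mean score is then

$$
\bar{x} = \frac{(n-1)\,s + (s + d)}{n} = s + \frac{d}{n}
$$

so the divergent score shifts the mean by $d/n$. For a reviewer scoring three points below the others ($d = -3$), the shift is $-0.75$ with four reviewers and $-0.6$ with five, a 20% smaller effect. Real score sets are rarely this uniform, so this is only an indication of scale.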

The Peer Review Analysis Committee has now met three times. Members are working closely with the Office of NHMRC on data analysis and modelling of de-identified peer reviewer scores from the Investigator and Ideas Grant schemes to investigate issues such as the impact of divergent scores on outcomes and variations between reviewers in score distribution. We anticipate releasing a report on this work once it is complete. Advice from this committee and from Research Committee will help to inform the management of scores in the future.

In implementing these changes, NHMRC will ask all peer reviewers to participate in a post-review survey as usual to seek feedback on the process used in 2021. The outcomes of the survey will be considered along with analysis of data from the round to evaluate the effectiveness of the new process. NHMRC will also continue to welcome feedback from the research sector on their experience as grant applicants, reviewers and administrators.

Why are we doing this?

NHMRC has two motivations in making these changes to the peer review process for Ideas Grants.

The first is to continue to improve the quality and robustness of peer review.

- While independent assessment followed by a GRP meeting can be highly effective when the expertise of the GRP is well-matched to its set of applications, the process is less robust when matching is more limited.

- By shifting the focus to optimising the matching of reviewers to individual applications, supported by peer reviewer training and written feedback to applicants, we expect that the quality of peer review will be improved for most Ideas Grant applications.

The second is to streamline the peer review process.

- Streamlining the process will reduce the workload for peer reviewers. NHMRC depends on peer reviewers to deliver its grant program and is well aware of the heavy demands that we, other funding agencies, journals and institutions place on researchers to undertake peer review. We want to lessen this load by reducing the length and complexity of the peer review cycle in each application round and by better matching of applications to suitable reviewers – it is easier to review an application in your own field.

- Streamlining the process will also make room for further innovation. During the 2017 consultation on peer review for the new grant program and subsequent discussions, many researchers have called for new and more flexible approaches to peer review for our major schemes. Possibilities include multiple (or even continuous) grant cycles each year, rapid resubmission of near-miss applications and iterative peer review. None of these has been feasible with our traditional multi-step processes, taking more than 6 months per round and with substantial logistical constraints.

We see independent assessment by multiple well-matched reviewers, without the constraints of GRP meetings, as a significant step forward in the design of a rapid, flexible process for expert peer review in this important grant scheme.

Appendix A: Suitability of peer reviewers in 2019 and 2020 Ideas Grant rounds

Background – two approaches to the selection of peer reviewers

Panel-centric peer reviewer selection

- Defined as the allocation of groups of peer reviewers (panels) to groups of applications; reviewers and applications are restricted to a single GRP.

- Selection of peer reviewers may be constrained by:

  - availability of suitable reviewers (i.e. with relevant expertise, avoiding COI)

  - the need to construct each GRP with suitable reviewers for a large group of applications, usually covering a range of disciplines (because all GRP members score all their panel’s short-listed applications at the GRP meeting, subject to any COI)

  - the need to have a manageable number of GRPs for scheduling meetings

  - the need to assign a minimum number of applications to each reviewer as a spokesperson to enable benchmarking during their initial independent review before short-listing

  - the need to balance workloads between reviewers

  - the need to balance peer reviewer gender, state and institution for each GRP, as well as over the whole reviewer group for the grant round.

Application-centric peer reviewer selection

- Defined as the allocation of peer reviewers to individual applications; each application in a grant round can have a unique set of reviewers.

- Selection of peer reviewers may be constrained by:

  - availability of suitable reviewers (i.e. with relevant expertise, avoiding COI)

  - the need to assign a minimum number of applications to each reviewer to enable benchmarking

  - the need to balance workloads between reviewers

  - the need to balance peer reviewer gender, state and institution for applications individually and as a whole for the grant round.

Selection of peer reviewers in 2019 and 2020 Ideas Grant rounds

Potential peer reviewers were identified from self-nominations and from awardees of 2018 Project Grants and 2019 Ideas Grants, taking into account specific expertise requirements.

All potential peer reviewers were asked to screen a large set of grant applications in their assigned superpanel (based on discipline groupings) and to declare for each application:

- their suitability to review it (Yes/Moderate/Limited/No)

- whether they had any conflicts of interest with it.

With the aim of maximising suitability and minimising conflicts of interest, these declarations were used for:

- panel-centric review in 2019: groups of applications were matched to groups of reviewers (GRPs) and then each application was assigned to four spokespersons on the relevant GRP for the initial assessment (pre-NFFC)

- application-centric peer review in 2020: with the decision not to hold GRP meetings because of the pandemic, a form of application-centric review was used in which each application was assigned to four peer reviewers using the declarations made for the larger superpanel grouping of applications and assessors.
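The declaration-based matching described above can be illustrated with a simple greedy assignment. This is a minimal sketch under stated assumptions, not NHMRC's allocation procedure: the ranking of declarations, the per-reviewer capacity `max_load`, the tie-break on current workload and all names (`Reviewer`, `assign_reviewers`) are hypothetical, and the sketch ignores the benchmarking minimum and the gender, state and institution balance listed earlier.

```python
from dataclasses import dataclass, field

# Hypothetical ordering of declared suitability; "No" declarations are never assigned.
SUITABILITY_RANK = {"Yes": 0, "Moderate": 1, "Limited": 2}


@dataclass
class Reviewer:
    name: str
    max_load: int  # hypothetical cap on applications per reviewer
    assigned: list = field(default_factory=list)


def assign_reviewers(applications, reviewers, declarations, per_app=4):
    """Greedily assign up to `per_app` reviewers to each application.

    `declarations` maps (reviewer name, application id) -> (suitability, has_coi).
    A missing declaration is treated as "No". Reviewers with a conflict of interest,
    a "No" declaration or a full workload are skipped; the rest are ranked by declared
    suitability, then by current workload, then by name.
    """
    by_name = {r.name: r for r in reviewers}
    allocation = {}
    for app in applications:
        candidates = []
        for r in reviewers:
            suitability, has_coi = declarations.get((r.name, app), ("No", False))
            if has_coi or suitability not in SUITABILITY_RANK:
                continue
            if len(r.assigned) >= r.max_load:
                continue
            candidates.append((SUITABILITY_RANK[suitability], len(r.assigned), r.name))
        chosen = [name for _, _, name in sorted(candidates)[:per_app]]
        for name in chosen:
            by_name[name].assigned.append(app)
        allocation[app] = chosen
    return allocation


reviewers = [Reviewer("A", 2), Reviewer("B", 2), Reviewer("C", 2)]
declarations = {
    ("A", "app1"): ("Yes", False),
    ("B", "app1"): ("Moderate", False),
    ("C", "app1"): ("Yes", True),   # conflict of interest: excluded
    ("A", "app2"): ("Moderate", False),
    ("B", "app2"): ("Yes", False),
    ("C", "app2"): ("No", False),   # declared unsuitable: excluded
}
print(assign_reviewers(["app1", "app2"], reviewers, declarations, per_app=2))
# {'app1': ['A', 'B'], 'app2': ['B', 'A']}
```

Because each application gets its own candidate list, the reviewer set can differ from one application to the next, which is the defining feature of application-centric selection.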

| Parameter | 2019 | 2020 |

|---|---|---|

| Applications received | 2739 | 2995 |

| Peer reviewers approached to provide declarations | 736 | 602 |

| Peer reviewers selected for assessment¹ | 568 | 585 |

| Number of applications per spokesperson/peer reviewer² | 18-22 | 25-30 |

| Number of applications per GRP (after NFFC) | 23-31 | Not applicable |

| Number of reviewers per GRP | 13-16 | Not applicable |

| Number of reviews received per application | 4 reviews: 97.6%; 3 reviews: 2.4% | 4 reviews: 99.4%; 3 reviews: 0.6% |

| Total number of reviews received | 10868 | 11954 |

1. Reviewers were selected taking into account the need to assign a minimum number of applications to each reviewer, based on the declarations provided.

2. A few reviewers received smaller numbers of applications (usually because of niche expertise).

| Declared suitability | 2019 | 2020 |

|---|---|---|

| Yes | 4101 (37.7%) | 8095 (67.7%) |

| Moderate | 5744 (52.9%) | 3824 (32.0%) |

| Limited | 1020 (9.4%) | 34 (0.3%) |

| No | 3 (0.03%) | 1 (0.01%) |

| Total | 10868 | 11954 |
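The percentages in this table can be reproduced from the counts. The short sketch below does that and also combines the "Yes" and "Moderate" categories; the combined share is a figure derived here for illustration, not one reported in the table.

```python
# Reviews received, by the reviewer's declared suitability (table above).
DECLARED = {
    2019: {"Yes": 4101, "Moderate": 5744, "Limited": 1020, "No": 3},
    2020: {"Yes": 8095, "Moderate": 3824, "Limited": 34, "No": 1},
}


def share(part, whole):
    """Percentage of `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


for year, counts in DECLARED.items():
    total = sum(counts.values())
    yes_or_moderate = counts["Yes"] + counts["Moderate"]
    print(year, total, share(counts["Yes"], total), share(yes_or_moderate, total))
# 2019 10868 37.7 90.6
# 2020 11954 67.7 99.7
```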

All Ideas Grant peer reviewers were invited to complete a survey after they had completed peer review in 2019 and 2020. In both years, reviewers were asked how well the applications assigned to them matched their expertise.

| Parameter | 2019 | 2020 |

|---|---|---|

| Number of peer reviewers | 568 | 585 |

| Q21: To what extent do you agree with the following statement: In general, the applications assigned to me as Spokesperson (2019)/assessor (2020) matched my area/s of expertise. | ||

| Number of respondents | 367 | 303 |

| Strongly Agree | 28(7.6%) | 63(20.8%) |

| Agree | 161(43.9%) | 163(53.8%) |

| Neither agree nor disagree | 80 (21.8%) | 49 (16.2%) |

| Disagree | 76 (20.7%) | 24 (7.9%) |

| Strongly disagree | 22 (6.0%) | 4 (1.3%) |

| Q33: If you were also an assessor in the 2019 Ideas Grants round, was the suitability of applications assigned to you in 2020 overall better compared with the suitability of applications assigned to you in 2019? | ||

| Number of respondents | Not applicable | 300 |

| Yes | | 136 (45.3%; 66.3% of applicable responses) |

| No | | 50 (16.7%; 24.4% of applicable responses) |

| Not applicable | | 95 (31.7%; n/a) |

| Unsure | | 19 (6.3%; 9.3% of applicable responses) |
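The two percentages given for each Q33 answer are consistent with the first being a share of all 300 respondents and the second a share of the 205 respondents who did not answer "Not applicable". A short check of that reading:

```python
# Q33 responses from the 2020 peer reviewer survey (table above).
Q33 = {"Yes": 136, "No": 50, "Not applicable": 95, "Unsure": 19}

all_respondents = sum(Q33.values())                   # 300
applicable = all_respondents - Q33["Not applicable"]  # 205


def pct(n, base):
    return round(100 * n / base, 1)


for answer, n in Q33.items():
    of_applicable = "n/a" if answer == "Not applicable" else f"{pct(n, applicable)}%"
    print(f"{answer}: {pct(n, all_respondents)}% of all respondents, {of_applicable} of applicable")
```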

Conclusion: Both NHMRC’s data (based on reviewers’ declared suitability before peer review – Table 2) and the survey data (based on respondent reviewers’ general perception after peer review – Table 3) suggest better matching of applications to peer reviewers in 2020 using application-centric reviewer selection than in 2019 using panel-centric reviewer selection.

Appendix B: Impact of Grant Review Panel meetings on final scores

Data are presented here on the impact of panel discussion on scores in the 2019 Ideas Grant and Investigator Grant rounds.

Impact of GRP meetings on final scores in the 2019 Ideas Grant round

The 2019 Ideas Grant round used a two-step process with panel-centric reviewer selection. In the first step, each application was scored independently by 3-4 reviewers (spokespersons). In the second step, each short-listed application was discussed by the full GRP at a face-to-face meeting. All GRP members, including spokespersons, scored the application during the meeting.

Of the original 2739 applications, 1001 were discussed at a GRP meeting; these applications had received a total of 3973 independent assessments before the GRP meeting.

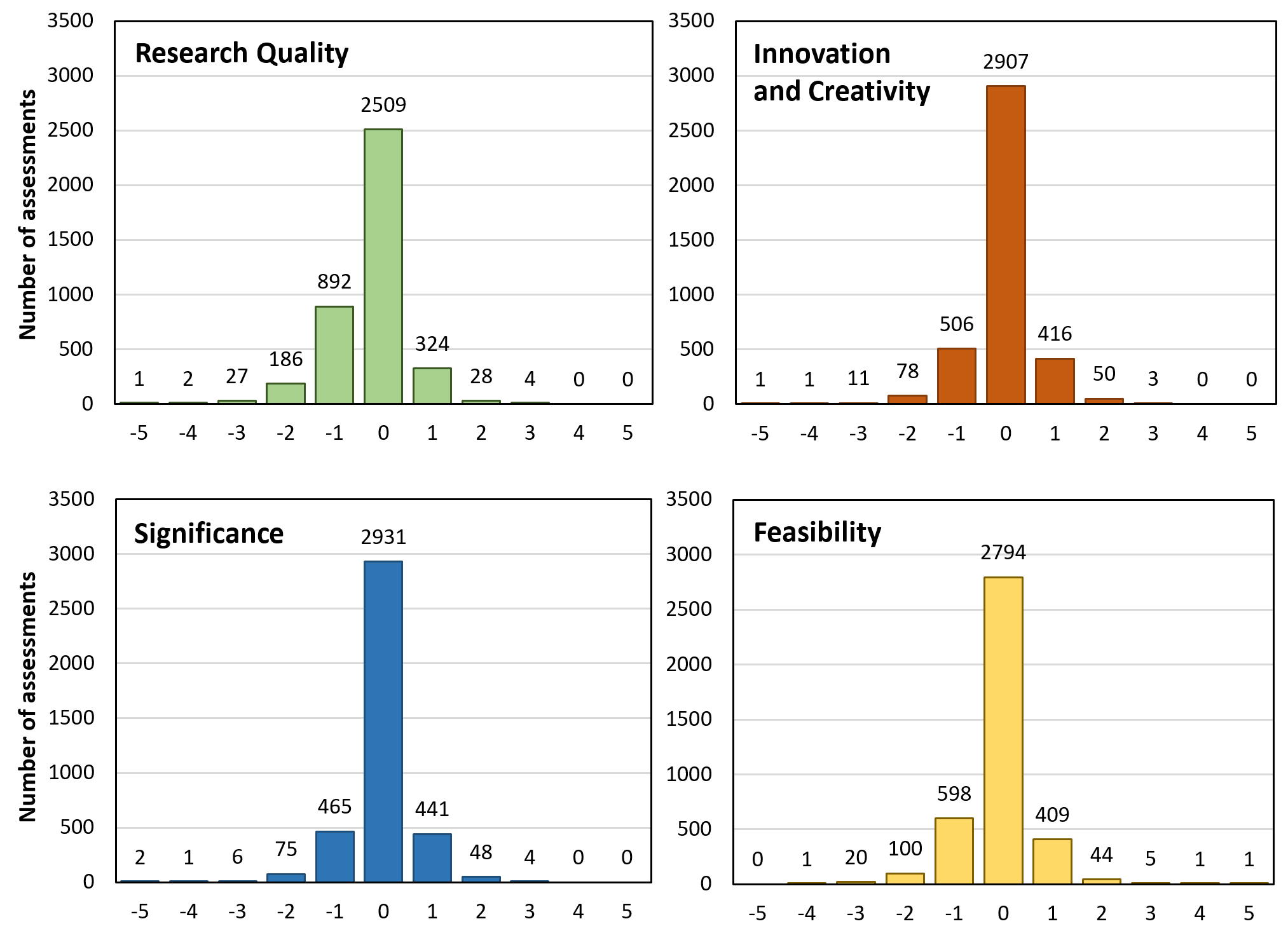

Figure 1 shows the difference between spokesperson (SP) scores before and after discussion at a GRP meeting for each of the assessment criteria (Research Quality [35%], Innovation and Creativity [25%], Significance [20%] and Feasibility [20%]) for all short-listed applications (n=1001).
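The bracketed weights indicate how much each criterion counts towards an application's score. Assuming, for illustration only, that the overall score is the weighted mean of the four criterion scores (the communique gives the weights but not the formula), a one-point change on a single criterion moves the overall score by that criterion's weight, between 0.20 and 0.35 points. The criterion scores below are hypothetical.

```python
# Criterion weights as listed for the 2019 Ideas Grant round.
WEIGHTS = {
    "Research Quality": 0.35,
    "Innovation and Creativity": 0.25,
    "Significance": 0.20,
    "Feasibility": 0.20,
}


def overall_score(criterion_scores):
    """Weighted mean of criterion scores (an assumed scoring formula, for illustration)."""
    if set(criterion_scores) != set(WEIGHTS):
        raise ValueError("expected exactly one score per criterion")
    return sum(WEIGHTS[c] * score for c, score in criterion_scores.items())


# Hypothetical spokesperson scores before and after a GRP discussion.
before = {"Research Quality": 6, "Innovation and Creativity": 5, "Significance": 6, "Feasibility": 5}
after = {**before, "Research Quality": 5}  # one-point drop on a single criterion

print(round(overall_score(before), 2), round(overall_score(after), 2))  # 5.55 5.2
```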

The table below shows the number of criteria for which spokespersons changed their scores as a result of discussion at a GRP meeting.

| Number of criteria for which score was changed* | 0 | 1 | 2 | 3 | 4 | Total |

|---|---|---|---|---|---|---|

| Number of spokesperson assessments | 1,380 | 1,231 | 778 | 372 | 212 | 3,973 |

| Percentage of spokesperson assessments | 34.7% | 31.0% | 19.6% | 9.4% | 5.3% | 100% |

*Incomplete assessments were not included in this analysis.

Together the data in Figure 1 and Table 2 show:

- Most spokespersons did not change their scores for a given criterion as a result of discussion at a GRP meeting.

- When they did change their score, they most commonly changed it for a single criterion and by one point.

- Spokespersons more commonly decreased than increased their scores as a result of discussion at a GRP meeting.
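The percentages in the table of changed criteria can be recomputed from the assessment counts. The share of assessments with at least one change, the single-criterion share among those, and the mean number of changed criteria are derived here for illustration; they are not reported in the communique.

```python
# Spokesperson assessments by number of criteria whose score changed (table above).
CHANGED = {0: 1380, 1: 1231, 2: 778, 3: 372, 4: 212}

total = sum(CHANGED.values())                               # 3973 assessments
percent = {k: round(100 * v / total, 1) for k, v in CHANGED.items()}
any_change = total - CHANGED[0]                             # 2593 assessments
criterion_changes = sum(k * v for k, v in CHANGED.items())  # 4751 changed criterion scores

print(percent)                                  # {0: 34.7, 1: 31.0, 2: 19.6, 3: 9.4, 4: 5.3}
print(round(100 * any_change / total, 1))       # 65.3
print(round(100 * CHANGED[1] / any_change, 1))  # 47.5 (single criterion, among assessments with a change)
print(round(criterion_changes / total, 2))      # 1.2
```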

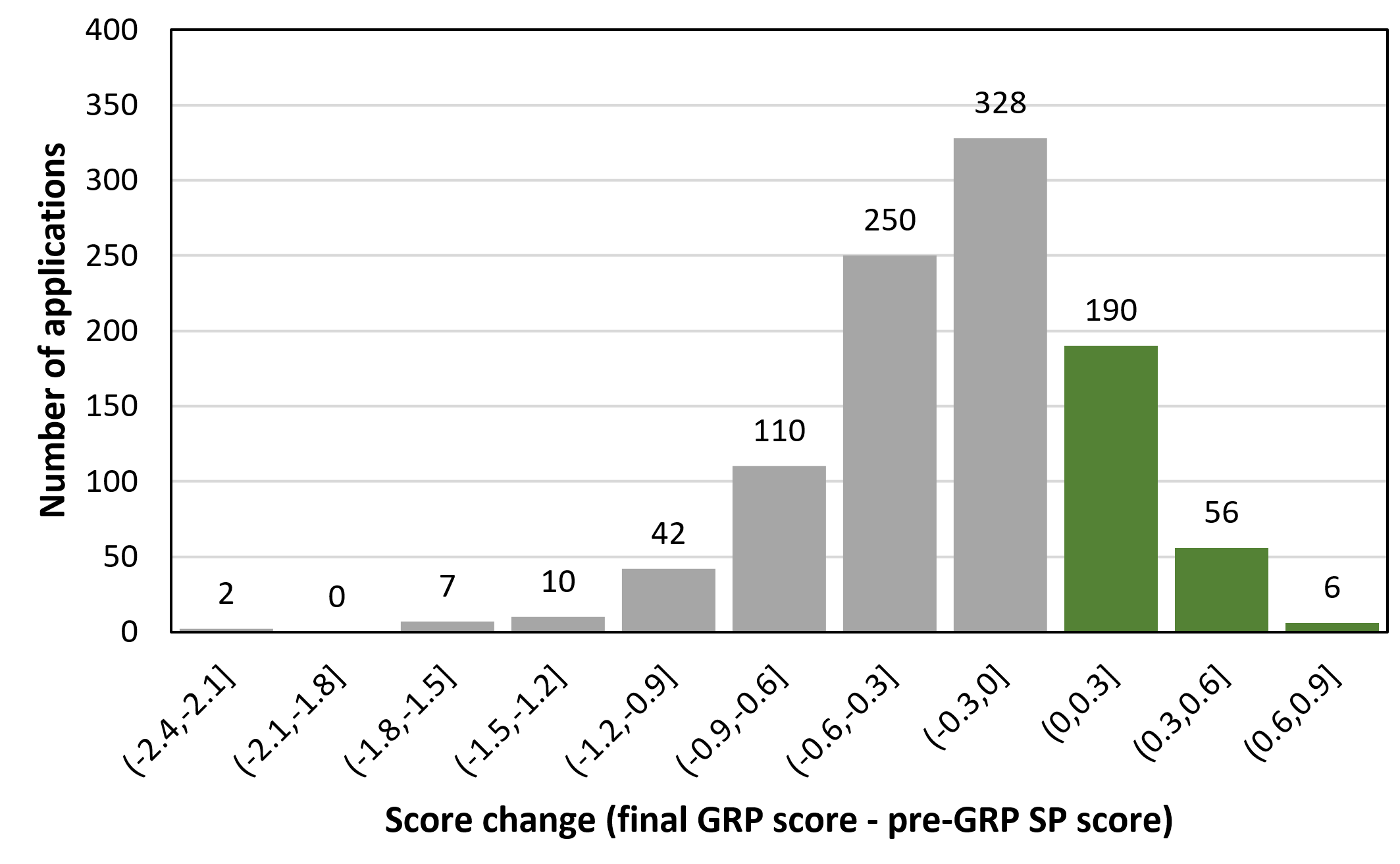

Figure 2 shows the difference between the average spokesperson score for each short-listed application before and after the GRP meeting (n=1001). The green shading shows applications whose average spokesperson score increased at the GRP meeting.

The data show:

- Final spokesperson scores were lower than their pre-GRP scores by an average of 0.329 for 58.3% of the applications discussed at a GRP meeting.

- Final spokesperson scores were higher than their pre-GRP scores by an average of 0.198 for 36.9% of the applications discussed at a GRP meeting.

- The average spokesperson score was unchanged after discussion at a GRP meeting for the remaining 4.8% of applications. (Of these 48 applications, there were 30 for which none of the spokespersons changed their scores for any criterion and 18 for which score changes by different spokespersons cancelled each other out.)
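The last point distinguishes two ways in which an application's average spokesperson score can stay the same. A minimal sketch with hypothetical scores follows; the `classify` helper is illustrative only, and because it compares each spokesperson's overall score it cannot detect offsetting changes across one spokesperson's own criteria.

```python
from statistics import mean


def classify(pre_scores, post_scores, tol=1e-9):
    """Classify an application by the change in its average spokesperson score."""
    delta = mean(post_scores) - mean(pre_scores)
    if delta < -tol:
        return "decreased"
    if delta > tol:
        return "increased"
    if list(pre_scores) == list(post_scores):
        return "unchanged: no spokesperson changed a score"
    return "unchanged: individual changes cancelled out"


# Hypothetical overall scores from three spokespersons, before and after discussion.
print(classify([5.5, 6.0, 5.0], [5.0, 6.0, 5.0]))  # decreased
print(classify([5.5, 6.0, 5.0], [5.5, 6.0, 5.0]))  # unchanged: no spokesperson changed a score
print(classify([5.5, 6.0, 5.0], [6.0, 5.5, 5.0]))  # unchanged: individual changes cancelled out
```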

Figure 3 shows the difference between the average spokesperson score for each short-listed application before the GRP meeting and the final application score provided by the whole panel (3-4 spokespersons and about 11 other members) at the GRP meeting. The green shading shows applications whose score increased at the GRP meeting.

The data show:

- Final scores from the whole panel were lower than pre-GRP spokesperson scores by an average of 0.415 for 74.7% of the applications discussed at a GRP meeting.

- Final scores from the whole panel were higher than pre-GRP spokesperson scores by an average of 0.209 for 25.2% of the applications discussed at a GRP meeting.

- The score for the remaining application (0.1%) was unchanged after discussion at the GRP meeting.

Taken together, Figures 2 and 3 show that discussion at GRP meetings most commonly led spokespersons to lower their scores, and that other GRP members most commonly amplified this effect by lowering the scores further. However, when spokespersons increased their scores at the GRP meeting, other panel members did not always follow, and fewer applications showed an increase with the whole panel (25.2%) than with the spokespersons alone (36.9%).
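Combining the shares and average changes reported for Figures 2 and 3 gives an approximate net change across all 1001 discussed applications. Because the inputs are rounded, the results are estimates derived here rather than reported figures; they suggest a net downward shift roughly twice as large for the whole panel as for the spokespersons alone.

```python
def net_change(groups):
    """Mean change across all applications, from (share of applications, mean change) pairs."""
    if abs(sum(share for share, _ in groups) - 1.0) > 1e-6:
        raise ValueError("shares must sum to 1")
    return sum(share * delta for share, delta in groups)


# Figure 2: final spokesperson average minus pre-GRP spokesperson average.
spokespersons = net_change([(0.583, -0.329), (0.369, 0.198), (0.048, 0.0)])
# Figure 3: whole-panel score minus pre-GRP spokesperson average.
whole_panel = net_change([(0.747, -0.415), (0.252, 0.209), (0.001, 0.0)])

print(round(spokespersons, 3), round(whole_panel, 3))  # -0.119 -0.257
```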

The impact of these score changes on funding outcomes is difficult to measure because of: (1) the inclusion of rescued applications in the shortlist discussed at GRP meetings; (2) the post-GRP removal of applications that became ineligible when applicants were awarded an Investigator Grant; and (3) budget adjustments, since not all budgets are discussed at GRP meetings and some are reduced, allowing additional applications to be funded.

Impact of Discussion by Exception in the 2019 Investigator Grant round

“Discussion by Exception” was trialled in the first round of the Investigator Grant scheme in 2019. It is an alternative approach to GRP meetings in which only those applications that require discussion are considered by a panel. In principle, depending on how many applications are discussed, this approach can be implemented even when application-centric reviewer selection is used and each application has a unique group of reviewers.

In the 2019 Investigator Grant round, five independent assessments were sought for each of the 1857 applications received; 87% received five and the remainder received four assessments. Each reviewer was assigned 24-39 applications.

Once they had seen the aggregated independent scores from all reviewers for their assigned applications, reviewers could nominate a maximum of two applications for discussion by exception. The panel of reviewers for each nominated application then met by videoconference with an independent chair and a community observer. The reviewer who nominated the application opened the discussion with the justification for their nomination. Following discussion, reviewers had the opportunity to re-score the application.

The outcomes were as follows:

- Of the 295 reviewers, 73 (24.7%) nominated applications for discussion; 29 reviewers nominated one and 44 nominated two applications.

- 105 applications (5.7%) were nominated for discussion; 95 were nominated once, 8 were nominated twice and two were nominated by three reviewers.

- Of the 240 reviewers who participated in a panel meeting, 53 re-scored a nominated application.

- 283 scores for individual assessment criteria were changed, representing 0.5% of all scores and affecting 73 applications (69.5% of applications discussed; 3.9% of all applications received).

- Re-scoring changed the funding outcome for 11 applications (10.5% of applications discussed and 0.6% of all applications received).

- In total, discussion by exception added about 7 weeks to the peer review process.
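The counts in the list above are internally consistent: nominations counted by reviewer and by application both total 117, and the reported percentages follow from the counts. A short check using only the figures given in the text:

```python
# Discussion by Exception figures from the 2019 Investigator Grant round (list above).
REVIEWERS = 295
APPLICATIONS_RECEIVED = 1857
NOMINATORS = {1: 29, 2: 44}            # reviewers nominating one / two applications
TIMES_NOMINATED = {1: 95, 2: 8, 3: 2}  # applications nominated once / twice / three times
APPS_WITH_CHANGED_SCORES = 73
APPS_WITH_CHANGED_OUTCOME = 11


def pct(part, whole):
    return round(100 * part / whole, 1)


# Each nomination is counted once from the reviewer side and once from the application side.
by_reviewer = sum(k * n for k, n in NOMINATORS.items())
by_application = sum(k * n for k, n in TIMES_NOMINATED.items())
assert by_reviewer == by_application == 117

discussed = sum(TIMES_NOMINATED.values())  # 105 applications
print(pct(sum(NOMINATORS.values()), REVIEWERS))              # 24.7
print(pct(discussed, APPLICATIONS_RECEIVED))                 # 5.7
print(pct(APPS_WITH_CHANGED_SCORES, discussed),
      pct(APPS_WITH_CHANGED_SCORES, APPLICATIONS_RECEIVED))  # 69.5 3.9
print(pct(APPS_WITH_CHANGED_OUTCOME, discussed),
      pct(APPS_WITH_CHANGED_OUTCOME, APPLICATIONS_RECEIVED))  # 10.5 0.6
```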

Of 185 respondents to the Peer Reviewer Survey, 42% agreed or strongly agreed that Discussion by Exception was an important part of the peer review process. Among concerns noted was the inequity of discussing and re-scoring only selected applications.

Conclusion: Few applications were nominated for discussion. Although most of those discussed were re-scored, Discussion by Exception had only a modest effect on funding outcomes relative to the extra burden on reviewers and the time added to the review process.

Figure 1 description

| SP score change | Number of assessments |

|---|---|

| -5 | 1 |

| -4 | 2 |

| -3 | 27 |

| -2 | 186 |

| -1 | 892 |

| 0 | 2509 |

| 1 | 324 |

| 2 | 28 |

| 3 | 4 |

| 4 | 0 |

| 5 | 0 |

| SP score change | Number of assessments |

|---|---|

| -5 | 1 |

| -4 | 1 |

| -3 | 11 |

| -2 | 78 |

| -1 | 506 |

| 0 | 2907 |

| 1 | 416 |

| 2 | 50 |

| 3 | 3 |

| 4 | 0 |

| 5 | 0 |

| SP score change | Number of assessments |

|---|---|

| -5 | 2 |

| -4 | 1 |

| -3 | 6 |

| -2 | 75 |

| -1 | 465 |

| 0 | 2931 |

| 1 | 441 |

| 2 | 48 |

| 3 | 4 |

| 4 | 0 |

| 5 | 0 |

| SP score change | Number of assessments |

|---|---|

| -5 | 0 |

| -4 | 1 |

| -3 | 20 |

| -2 | 100 |

| -1 | 598 |

| 0 | 2794 |

| 1 | 409 |

| 2 | 44 |

| 3 | 5 |

| 4 | 1 |

| 5 | 1 |
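The four distributions above can be summarised to check the statements made about Figure 1. The description does not say which table belongs to which criterion, so the sketch simply numbers them in order, and it treats the row immediately before `0` in each table as a change of -1.

```python
# Spokesperson score change -> number of assessments, one dict per Figure 1 table.
FIGURE_1 = [
    {-5: 1, -4: 2, -3: 27, -2: 186, -1: 892, 0: 2509, 1: 324, 2: 28, 3: 4, 4: 0, 5: 0},
    {-5: 1, -4: 1, -3: 11, -2: 78, -1: 506, 0: 2907, 1: 416, 2: 50, 3: 3, 4: 0, 5: 0},
    {-5: 2, -4: 1, -3: 6, -2: 75, -1: 465, 0: 2931, 1: 441, 2: 48, 3: 4, 4: 0, 5: 0},
    {-5: 0, -4: 1, -3: 20, -2: 100, -1: 598, 0: 2794, 1: 409, 2: 44, 3: 5, 4: 1, 5: 1},
]


def summarise(dist):
    """Unchanged share, decreases vs increases, and share of changes that were one point."""
    total = sum(dist.values())
    decreased = sum(n for change, n in dist.items() if change < 0)
    increased = sum(n for change, n in dist.items() if change > 0)
    one_point = dist[-1] + dist[1]
    return {
        "total": total,
        "unchanged %": round(100 * dist[0] / total, 1),
        "decreased": decreased,
        "increased": increased,
        "one-point % of changes": round(100 * one_point / (decreased + increased), 1),
    }


for i, dist in enumerate(FIGURE_1, start=1):
    print(i, summarise(dist))

# Changed criterion scores across all four tables; this matches the total implied by the
# table of the number of criteria changed per assessment (1x1231 + 2x778 + 3x372 + 4x212).
print(sum(sum(dist.values()) - dist[0] for dist in FIGURE_1))  # 4751
```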

Figure 2 description

| Score change (final SP score - pre-GRP SP score) | Number of applications |

|---|---|

| (-2.3, -2.1] | 1 |

| (-2.1, -1.8] | 1 |

| (-1.8, -1.6] | 1 |

| (-1.6, -1.4] | 5 |

| (-1.4, -1.2] | 3 |

| (-1.2, -0.9] | 17 |

| (-0.9, -0.7] | 32 |

| (-0.7, -0.5] | 83 |

| (-0.5, -0.2] | 162 |

| (-0.2, 0.0] | 327 |

| (0.0, 0.2] | 247 |

| (0.2, 0.5] | 93 |

| (0.5, 0.7] | 24 |

| (0.7, 0.9] | 5 |

Figure 3 description

| Score change (final whole-panel score - pre-GRP SP score) | Number of applications |

|---|---|

| (-2.4, -2.1] | 2 |

| (-2.1, -1.8] | 0 |

| (-1.8, -1.5] | 7 |

| (-1.5, -1.2] | 10 |

| (-1.2, -0.9] | 42 |

| (-0.9, -0.6] | 110 |

| (-0.6, -0.3] | 250 |

| (-0.3, 0] | 328 |

| (0, 0.3] | 190 |

| (0.3, 0.6] | 56 |

| (0.6, 0.9] | 6 |